This HTML file addresses misconceptions about the reference genome of SARS-CoV-2 that have emerged among people who claim that viruses do not exist.

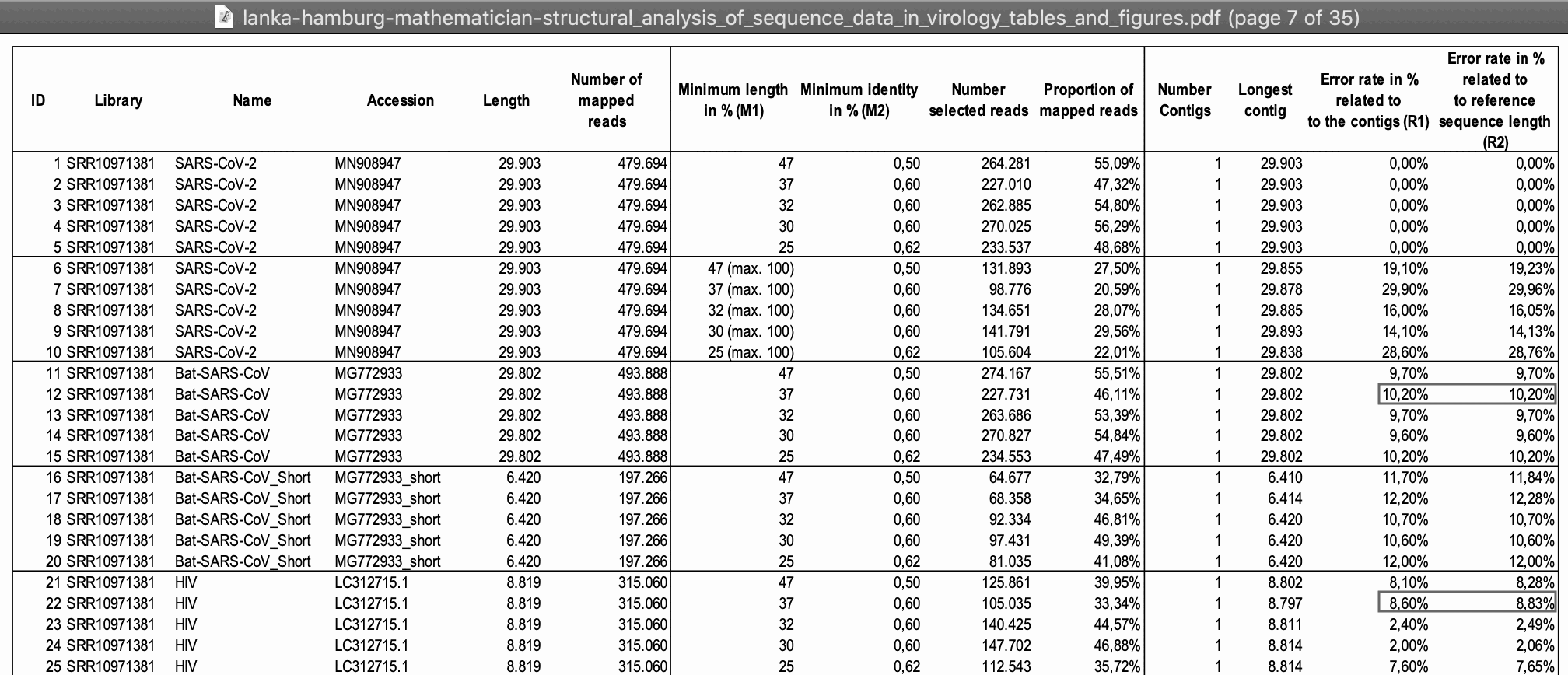

In the paper by Wu et al. where the Wuhan-Hu-1 reference genome was described, the authors wrote that when they did metagenomic sequencing for a sample of lung fluid from a COVID patient, they got a total of 384,096 contigs when they did de-novo assembly for the reads using MEGAHIT, and they used the longest contig as the initial reference genome of SARS2. Some no-virus people thought it meant that the other contigs were alternative candidates for the genome of SARS2, but actually the other contigs mostly consisted of fragments of the human genome or bacterial genomes. In Supplementary Table 2 which shows the best match on BLAST for the 50 most abundant contigs, the best match is bacterial for all other contigs except the contig for SARS2.

Wu et al.'s longest MEGAHIT contig happened to be the contig for SARS2 by chance, because there were no bacterial contigs longer than 30,000 bases even though one bacterial contig listed in Supplementary Table 1 was longer than 10,000 bases. When I ran MEGAHIT for Wu et al.'s raw reads, my second-longest contig was a 16,036-base contig for Leptotrichia hongkongensis and my third-longest contig was a 13,656-base contig for Veillonella parvula. However in other cases MEGAHIT will of course produce longer contigs for bacteria, and for example when I used MEGAHIT to assemble metagenomic reads of the bat sarbecovirus RmYN02, my longest contig was a contig for E. coli which was about 50,000 bases long, and the contig for RmYN02 was only the 4th-longest contig.

When I ran MEGAHIT on Wu et al.'s raw reads, I got a total of about 30,000 contigs, but when I aligned my contigs against a set of about 15,000 virus reference sequences, I got only 8 contigs which matched viruses. I got only one contig which matched SARS2. I got 4 contigs which matched the human endogenous retrovirus K113, but those contigs probably just came from the human genome, since the sequences of HERVs are incorporated into the human genome. And I got one short contig which matched a Streptococcus phage and two short contigs which matched a parvovirus, both of which also had a handful of reads in the STAT results, so they probably represent genuine matches to viruses. So among approximately 30,000 contigs, there may have been only 4 contigs which came from viruses. The reason why my number of contigs was an order of magnitude lower than the number reported by Wu et al. is probably that almost all human reads were masked with N bases in the reads uploaded to SRA, so my contigs included only a small number of contigs for fragments of the human genome.

About half of Wu et al.'s reads consist of only N bases, but that's probably because human reads were masked to comply with NCBI's privacy policy. The NCBI has even published a command-line utility called Human Scrubber, which is used to replace human reads with N bases in raw reads that are submitted to the Sequence Read Archive. Wu et al. wrote that about 24 million reads out of about 57 million reads remained after they filtered out human reads, which roughly matches the reads at the SRA, where about 27 million reads out of a total of about 57 million consist of only N bases.
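The share of fully masked reads can be counted directly from a FASTQ file. Below is a minimal sketch that uses only `awk` on a made-up four-read file; `toy.fastq` and the helper name `count_all_n` are hypothetical and not part of any tool mentioned above.

```shell
# Toy FASTQ with four reads, two of which consist only of N bases (made-up data).
cat > toy.fastq <<'EOF'
@r1
ACGTNACGT
+
IIIIIIIII
@r2
NNNNNNNNN
+
!!!!!!!!!
@r3
NNNN
+
!!!!
@r4
GGGTTTAAA
+
IIIIIIIII
EOF

# In a 4-line-per-record FASTQ, the sequence is the 2nd line of every record.
# Prints: number of all-N reads, total reads, percent of all-N reads.
count_all_n() {
  awk 'NR%4==2{total++; if($0~/^N+$/)masked++}
       END{printf "%d %d %.1f\n", masked, total, 100*masked/total}' "$1"
}

count_all_n toy.fastq
```

With the figures quoted above, 27 million all-N reads out of 57 million total would come out at about 47%.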

The first version of Wuhan-Hu-1 submitted to GenBank was 30,474 bases long but the current version is only 29,903 bases long. That's because the first version accidentally included a 618-base segment of human DNA at the 3' end, where the first 20 bases of the segment are part of both the actual genome of SARS2 and the human genome. The error was soon fixed in the second version of Wuhan-Hu-1 which was published at GenBank two days after the first version, and the error was even mentioned by Eddie Holmes on Twitter. I have found similar assembly errors in other sarbecovirus sequences, like RfGB02, Sin3408, Rs7907, Rs7931, GX-P1E, and Ra7909. So the reason why the original 30,474-base contig cannot be reproduced from the reads at the SRA is because almost all human reads were masked with N bases at SRA.

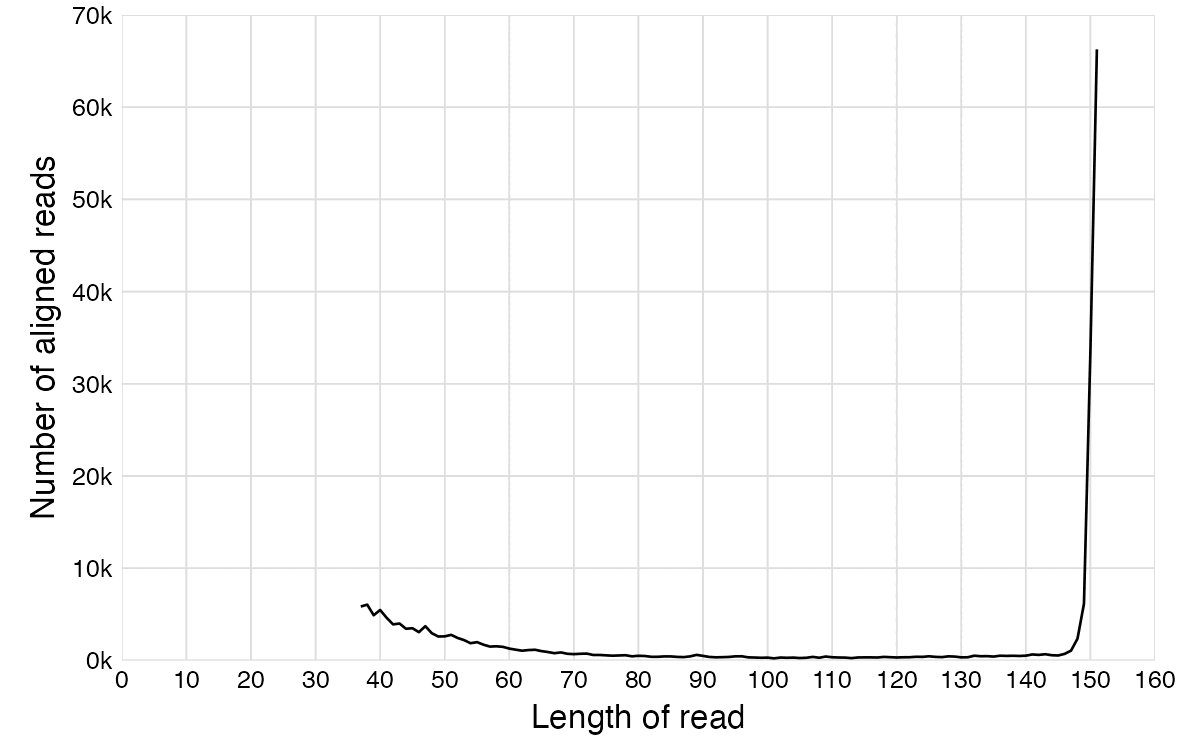

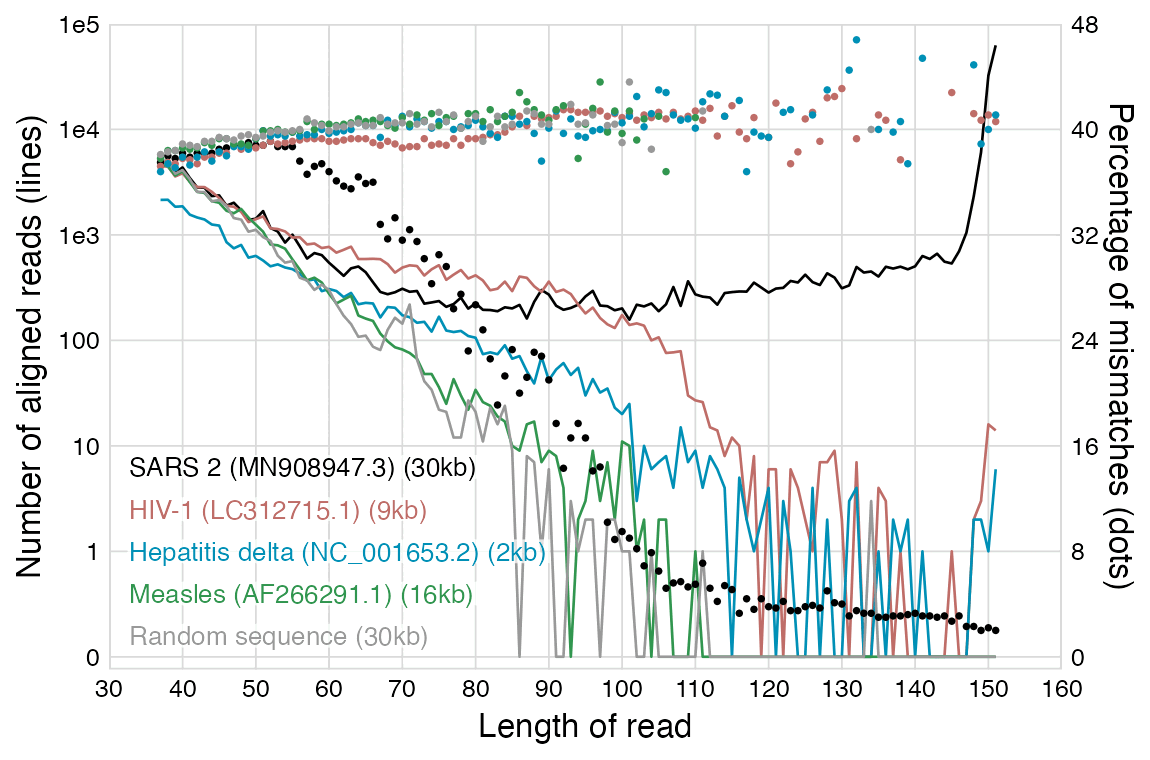

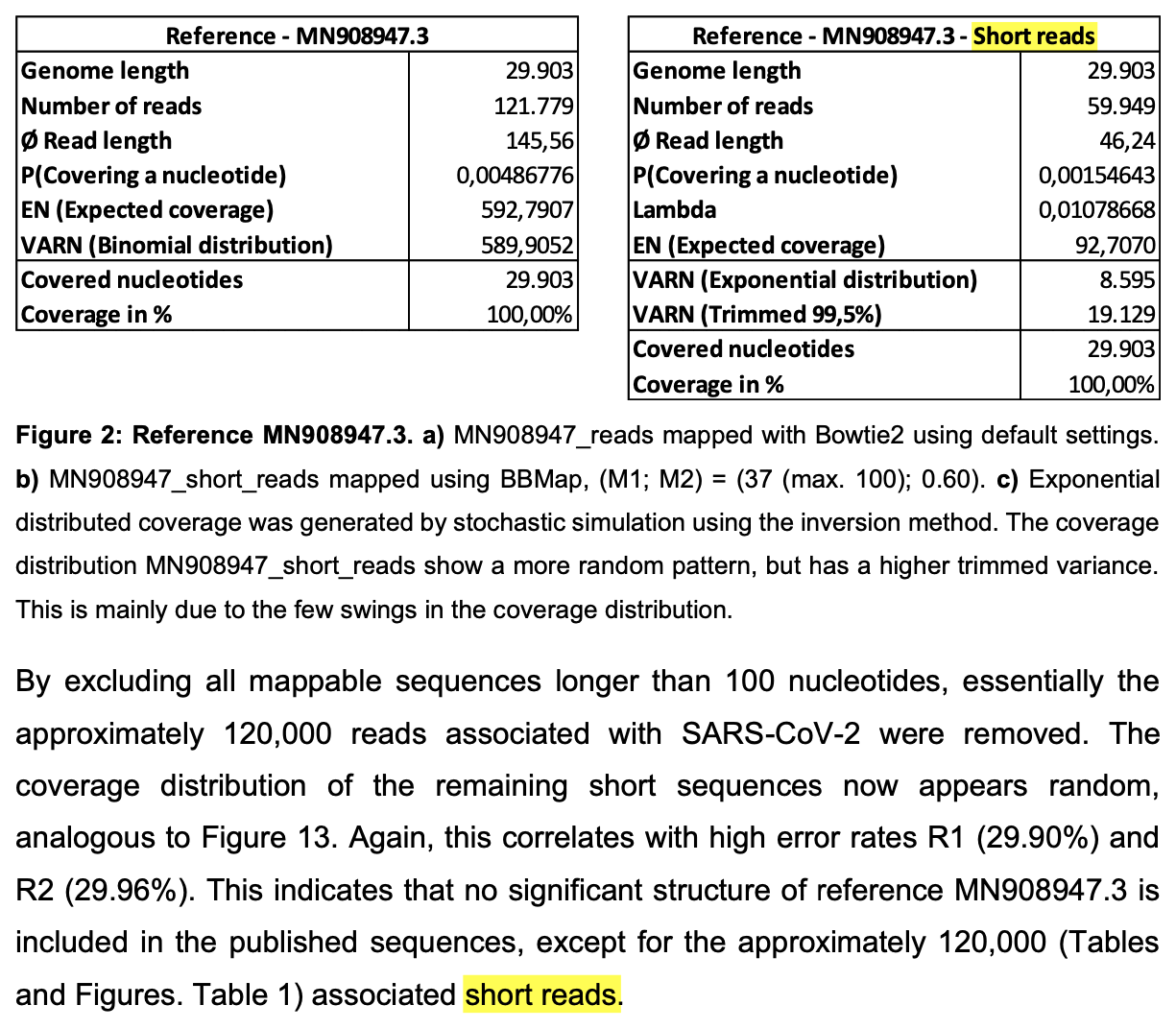

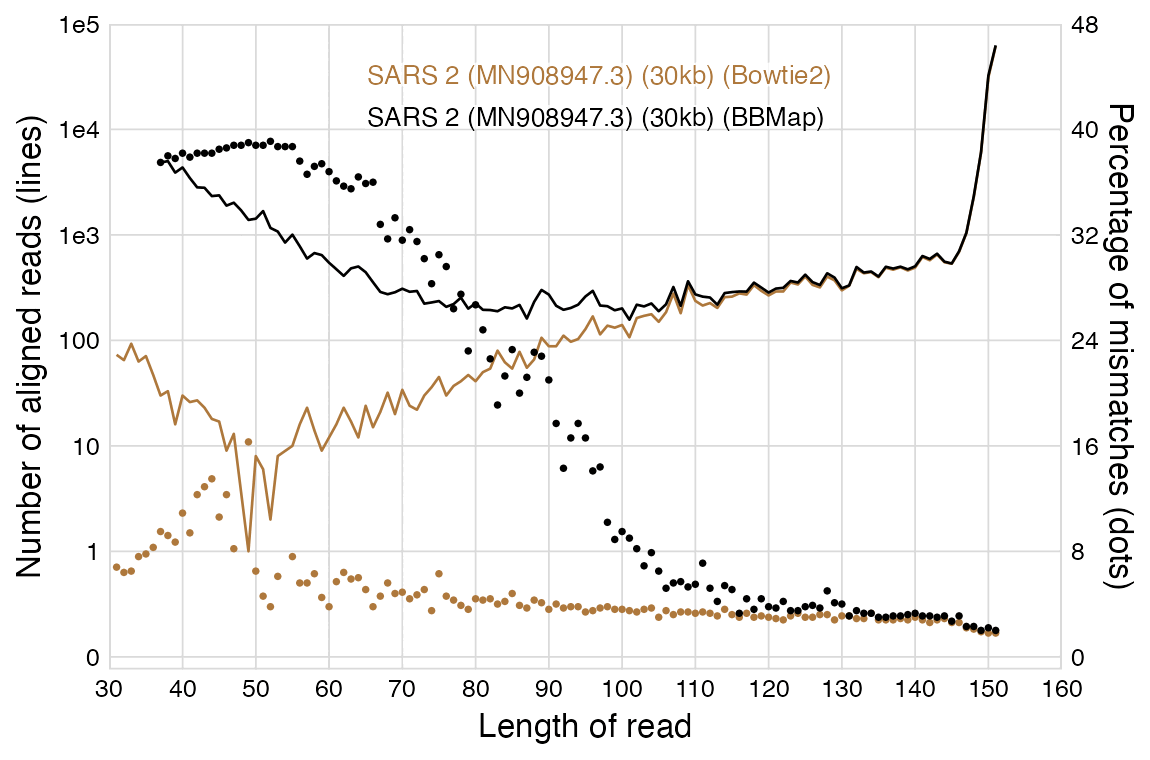

When the anonymous mathematician from Hamburg used BBMap to align Wu et al.'s raw reads against the reference genomes of SARS2 and other viruses, he got a large number of reads to align against the reference genomes of HIV-1, measles, and hepatitis delta, but that's because he ran BBMap with the parameter minratio=0.1 which used extremely loose alignment criteria. Among the reads which aligned against HIV-1, the average error rate was about 40% and the most common read length was 37 bases, but almost all of the reads would've remained unaligned if he had run BBMap with the default parameters which tolerate a much lower number of errors. The error rate was not mentioned in his paper but I was able to calculate it by running his code myself. However for the reads which aligned against SARS2, the most common length was 151 bases and the average error rate of the 151-base reads was only about 2%.
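One way to get an error rate per read length from an alignment is to divide the `NM` tag (edit distance to the reference) by the read length for each SAM record. The sketch below does this on made-up records. It assumes the aligner writes an `NM:i:` tag, and it is not necessarily how the figures above were calculated; `sam_line` and `error_rate_by_length` are hypothetical helper names.

```shell
# Build a toy SAM file (made-up reads): two 10-base reads with 4 mismatches each
# and two 20-base reads with 0 and 1 mismatches.
sam_line() { # usage: sam_line name seq mismatches
  printf '%s\t0\tref\t1\t60\t%dM\t*\t0\t0\t%s\t*\tNM:i:%d\n' "$1" "${#2}" "$2" "$3"
}
{
  sam_line r1 ACGTACGTAC 4
  sam_line r2 TTGACCATGA 4
  sam_line r3 ACGTACGTACGTACGTACGT 0
  sam_line r4 GGATCCTTAAGGATCCTTAA 1
} > toy.sam

# Prints tab-separated: read length, number of reads, mean error rate in percent.
error_rate_by_length() { # usage: error_rate_by_length file.sam
  awk -F'\t' '
    /^@/ {next}                      # skip SAM header lines
    {
      len=length($10); found=0
      for(i=12;i<=NF;i++) if($i~/^NM:i:/){nm=substr($i,6)+0; found=1}
      if(!found||len==0) next        # skip records without an NM tag
      n[len]++; sum[len]+=nm/len
    }
    END{for(l in n) printf "%d\t%d\t%.1f\n", l, n[l], 100*sum[l]/n[l]}' "$1" | sort -n
}

error_rate_by_length toy.sam
```

On real data the input would be something like the output of `samtools view` on the BAM file instead of `toy.sam`.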

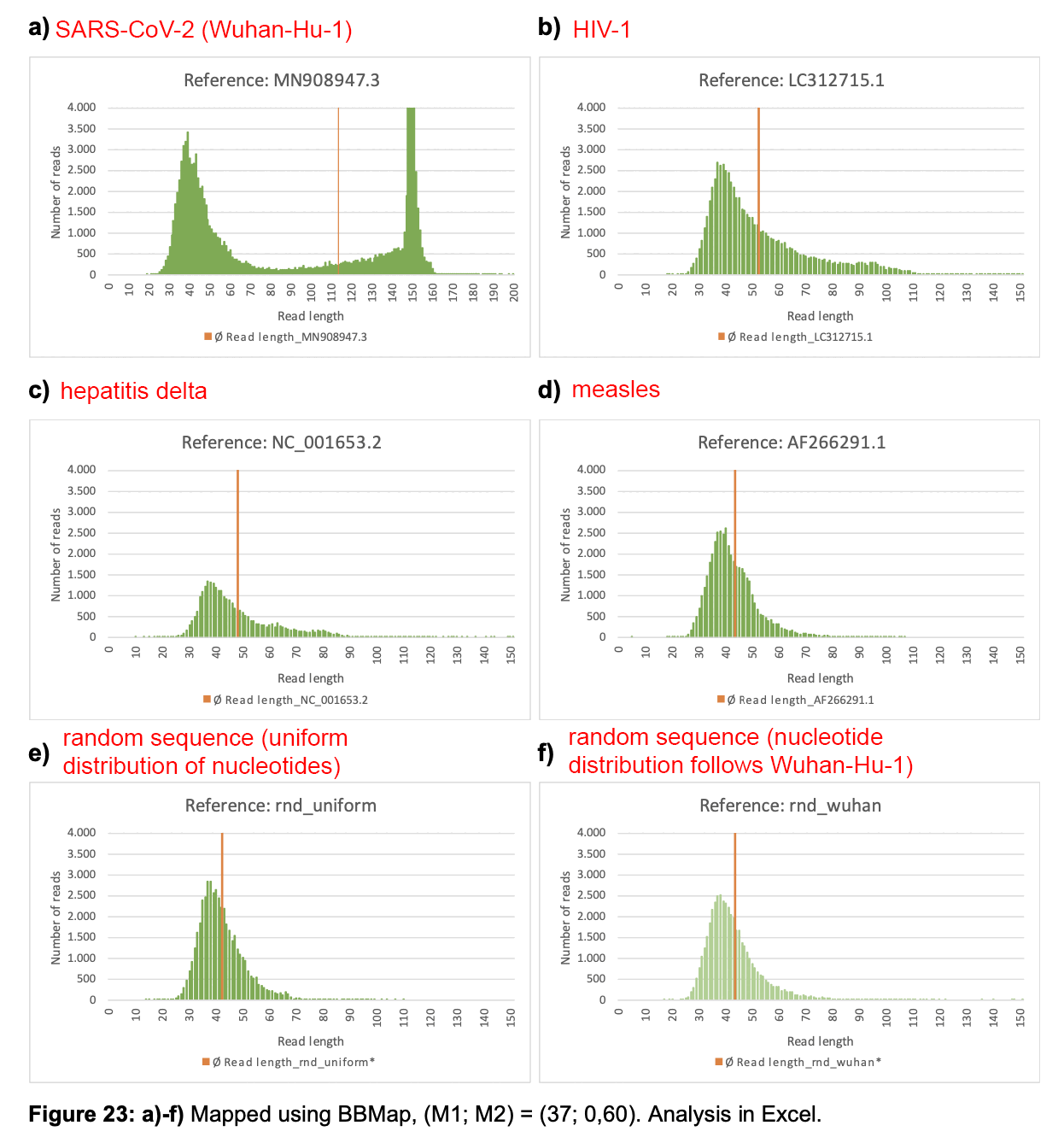

In the paper by the mathematician from Hamburg, there's plots for each virus reference sequence which show how many reads of each length aligned against the reference. The plot for SARS2 has a bimodal distribution with one peak around length 151 and another peak around length 35-40, but the plots for other viruses only have a single peak around length 35-40, which consists of reads which did not actually match the reference genome and which would have remained unaligned if he ran BBMap with the default parameters. But in the plot for SARS2, the y-axis is cut off in a deceptive way so you cannot see that the peak for reads around length 151 is much higher than the peaks around length 35-40 in the plots for other viruses.

Wu et al. wrote that the longest contig they got with Trinity was only 11,760 bases long, but that's because Trinity split the genome of SARS2 into multiple shorter contigs, which probably either overlapped or had only small gaps in between. In order to merge the contigs, Wu et al. could've aligned all contigs against a related virus like ZC45 or SARS1, and they could've selected the most common base at each spot of the alignment. And if any gaps remained, Wu et al. could've filled in the gaps by using segments on either end of the gap as PCR primers and by sequencing the PCR amplicons. By default Trinity and MEGAHIT both require a region of a genome to be covered by at least two reads before the contigs get merged, so Wu et al. might also have gotten Trinity to merge the shorter contigs into a single contig if they had added the option --min_glue 1, which reduces the minimum coverage requirement from 2 to 1.

When I ran Trinity on Wu et al.'s raw reads without trimming, my longest contig was 29,879 bases long, and it was otherwise identical to the third version of Wuhan-Hu-1 except it included a 5-base insertion at the 5' end, it had one nucleotide change in the middle, and it was missing the last 29 bases of the poly(A) tail. When I ran Trinity again after trimming the reads, my longest contig was 31,241 bases long because there was a 1,379-base insertion in the middle which consisted of two segments that were identical to two different parts of Wuhan-Hu-1. The reason why Wu et al. failed to get a single complete contig with Trinity may have been because they used a different method to trim the reads, or because they used a different version of Trinity, or because Trinity was confused by the human reads which had been masked in my Trinity run.

In the current 29,903-base version of Wuhan-Hu-1, the poly(A) tail is 33 bases long, but the full poly(A) tail cannot be assembled from the metagenomic raw reads because it is not included in the raw reads. Wu et al. wrote that they sequenced the ends of the genome with RACE (rapid amplification of cDNA ends), which is a method similar to PCR that only requires specifying a single primer instead of two primers, so it can be used to amplify the end of a genome by specifying a single segment near the end as the primer. In October 2020 when the sequence of RaTG13 was updated at GenBank so that a new 15-base segment was added to the 5' end, some people from DRASTIC suspected fraud because the 15-base segment was not included in the metagenomic raw reads for RaTG13, but the segment was included in the RACE amplicon sequences for RaTG13, which were only published later in 2021. But I believe the RACE amplicon sequences of Wuhan-Hu-1 have never been published.

When Wu et al. wrote that they determined the viral genome organization of WHCV by aligning it against SARS-CoV Tor2 and bat SL-CoVZC45, they meant that after they had already assembled the complete genome of SARS2, they aligned it against SARS1 and ZC45 in order to make annotating the proteins easier. So if for example they got one open reading frame that started around position 21,500 and ended around position 25,000, they knew that it was the spike protein if it covered roughly the same region as the spike protein of SARS1. But they did not mean that they aligned the raw reads of SARS2 against a SARS1 genome in order to do reference-based assembly.

USMortality did an experiment where he downloaded the reference genomes of various viruses, he generated simulated short reads for the genomes, and he tried to assemble the reads back together with MEGAHIT, but he failed to get complete contigs for a couple of viruses, like HKU1, HIV-1, HIV-2, and porcine adenovirus. But that's because the reference genome of HKU1 contains a region where the same 30-base segment is repeated 14 times in a row. And in HIV-1 and HIV-2 there's a long terminal repeat where a long segment at the 5' end of the genome is repeated at the 3' end of the genome. And porcine adenovirus contains a tandem repeat where the same 724-base segment is repeated twice in a row. Also when USMortality did another experiment where he mixed together simulated reads from multiple different viruses and he ran MEGAHIT on the mixed reads, he failed to get complete contigs for SARS2 and SARS1, but that's because the contigs were split at a spot where there's a 74-base segment that is identical in the reference genomes of SARS2 and SARS1, and when I repeated his experiment, I was able to get complete contigs for SARS2 and SARS1 when I simply increased the maximum k-value of MEGAHIT from 141 to 161.

One reason why USMortality says that the genome has not been validated is that in all of Wu et al.'s reads which align against the very start of the 5' end of Wuhan-Hu-1, there are 3-5 extra bases at the start of the read that usually end with one or more G bases. However the G bases are part of the template-switching oligo which anneals to complementary C bases that are added to the 5' end of the RNA strand by reverse transcriptase. USMortality also got two extra G bases at the start of one of his MEGAHIT contigs, but I was able to get rid of them by trimming 5 bases from the ends of reads.

The standard reference genome of SARS2 is known as Wuhan-Hu-1, and it was described in a paper by Wu et al. titled "A new coronavirus associated with human respiratory disease in China". [https://www.nature.com/articles/s41586-020-2008-3] There's currently three versions of Wuhan-Hu-1 at GenBank: MN908947.1 is 30,474 bases long and it was published on January 12th 2020, MN908947.2 is 29,875 bases long and it was published on January 14th 2020, and MN908947.3 is 29,903 bases long and it was published on January 17th 2020. [https://www.ncbi.nlm.nih.gov/nuccore/MN908947.3?report=girevhist] I believe the dates are in UTC and not in a local timezone.

Apart from a single nucleotide change in the N gene, the only differences between the three versions are at the beginning of the genome in the 5' UTR and at the end of the genome in the 3' UTR:

$ brew install mafft

[...]

$ curl -s 'https://eutils.ncbi.nlm.nih.gov/entrez/eutils/efetch.fcgi?db=nuccore&rettype=fasta&id=MN908947.'{1,2,3}>wuhu.fa

$ mafft --globalpair --clustalout --thread 4 wuhu.fa

[...]

CLUSTAL format alignment by MAFFT G-INS-1 (v7.490)

MN908947.1 cggtgacgcatacaaaacattcccaccataccttcccaggtaacaaaccaaccaactttc

MN908947.2 cggtgacgcatacaaaacattcccaccataccttcccaggtaacaaaccaaccaactttc

MN908947.3 -----------attaaaggtt-----tataccttcccaggtaacaaaccaaccaactttc

*. *** .** .*********************************

[... 29,160 bases omitted]

MN908947.1 tgacctacacagctgccatcaaattggatgacaaagatccaaatttcaaagatcaagtca

MN908947.2 tgacctacacagctgccatcaaattggatgacaaagatccaaatttcaaagatcaagtca

MN908947.3 tgacctacacaggtgccatcaaattggatgacaaagatccaaatttcaaagatcaagtca

************ ***********************************************

[... 480 bases omitted (part of N gene, whole ORF10, part of 3' UTR)]

MN908947.1 aatgtgtaaaattaattttagtagtgctatccccatgtgattttaatagcttcttcctgg

MN908947.2 aatgtgtaaaattaattttagtagtgctatccccatgtgattttaatagcttctt-----

MN908947.3 aatgtgtaaaattaattttagtagtgctatccccatgtgattttaatagcttcttaggag

*******************************************************

MN908947.1 gatcttgatttcaacagcctcttcctcatactattctcaacactactgtcagtgaacttc

MN908947.2 ------------------------------------------------------------

MN908947.3 aatgacaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaa---------------------

MN908947.1 taaaaatggattctgtgtttgaccgtaggaaacatttcagtattccttatctttagaaac

MN908947.2 ------------------------------------------------------------

MN908947.3 ------------------------------------------------------------

MN908947.1 ctctgcaactcaagtgtctctgccaaagagtcttcaaggtaatgtcaaataccataacat

MN908947.2 ------------------------------------------------------------

MN908947.3 ------------------------------------------------------------

MN908947.1 attttttaagtttttgtatacttcctaggaatatatgtcatagtatgtaaagaagatctg

MN908947.2 ------------------------------------------------------------

MN908947.3 ------------------------------------------------------------

MN908947.1 attcatgtgatctgtactgttaagaaacacttcaaaggaaaacaacctctcatagatctt

MN908947.2 ------------------------------------------------------------

MN908947.3 ------------------------------------------------------------

MN908947.1 caaatcctgttgttcttgagttggaatgtacaatattcaggtagagatgctggtacaaaa

MN908947.2 ------------------------------------------------------------

MN908947.3 ------------------------------------------------------------

MN908947.1 aaagactgaatcatcaggctattagaaagataaactaggcctctaacatagtaactttaa

MN908947.2 ------------------------------------------------------------

MN908947.3 ------------------------------------------------------------

MN908947.1 cagtttgtacttatacatattttcacattgaaatatagttttattcatgactttttttgt

MN908947.2 ------------------------------------------------------------

MN908947.3 ------------------------------------------------------------

MN908947.1 tttagcttctctgtcttccattatttcaagctgctaaaaattaaaaatatcctatagcaa

MN908947.2 ------------------------------------------------------------

MN908947.3 ------------------------------------------------------------

MN908947.1 agggctatggcatctttttgtaaaaataaggaaagcaaggttttttgataatc

MN908947.2 -----------------------------------------------------

MN908947.3 -----------------------------------------------------

The first and second versions started with the 27-base segment CGGTGACGCATACAAAACATTCCCACC which was changed to ATTAAAGGTTT in the third version. If you remove the first 3 bases from CGGTGACGCATACAAAACATTCCCACC, then it's identical to a 24-base segment that's included near the end of all three versions of Wuhan-Hu-1:

$ seqkit grep -nrp \\.1 wuhu.fa|seqkit subseq -r4:27|seqkit locate -f- wuhu.fa|cut -f1,4-|column -t

seqID strand start end matched

MN908947.1 + 4 27 TGACGCATACAAAACATTCCCACC

MN908947.1 + 29360 29383 TGACGCATACAAAACATTCCCACC

MN908947.2 + 4 27 TGACGCATACAAAACATTCCCACC

MN908947.2 + 29360 29383 TGACGCATACAAAACATTCCCACC

MN908947.3 + 29344 29367 TGACGCATACAAAACATTCCCACC

I have found similar assembly errors in several other sarbecovirus sequences, where there's a short segment at either end of the sequence which consists of another part of the genome or its reverse complement. For example in a sequence for the SARS1 isolate SoD, there's a 24-base segment at the 3' end which is the reverse complement of another 24-base segment near the 3' end:

$ curl 'https://eutils.ncbi.nlm.nih.gov/entrez/eutils/efetch.fcgi?db=nuccore&rettype=fasta&id=AY461660.1'>sod.fa

$ seqkit locate -ip cacattagggctcttccatatagg sod.fa|cut -f4-|column -t

strand start end matched

+ 29692 29715 cacattagggctcttccatatagg

- 29629 29652 cacattagggctcttccatatagg

$ seqkit subseq -r 29629:29652 sod.fa # the match on the minus strand is a reverse complement of the match on the plus strand

>AY461660.1 SARS coronavirus SoD, complete genome

CCTATATGGAAGAGCCCTAATGTG
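The reverse-complement relationship between the two SoD segments can be checked without seqkit. This is a minimal sketch that uses only `rev` and `tr`; `revcomp` is a hypothetical helper name.

```shell
# Reverse the string, then swap each base for its complement (case is preserved).
revcomp() { printf '%s\n' "$1" | rev | tr 'ACGTacgt' 'TGCAtgca'; }

# The 24-base segment at the 3' end of the SoD sequence:
revcomp cacattagggctcttccatatagg   # prints cctatatggaagagccctaatgtg
```

Apart from letter case, the result is the same as the segment that `seqkit subseq` printed for positions 29629-29652.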

Assembly errors like this are easy to spot if you do a multiple sequence alignment of related virus sequences, and you then look for inserts at the beginning or end of the alignment which are only included in one sequence but missing from all other sequences. And you can then do a BLAST search for the insert to find its origin.
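The search for inserts at the start of an alignment can be roughly automated. The sketch below reads an aligned FASTA file and reports a sequence if it starts at least a given number of columns before every other sequence. The input is a made-up toy alignment, `terminal_insert` is a hypothetical helper name, and only the 5' end is checked.

```shell
# Toy alignment (made-up): virusC has 7 extra bases before any other sequence starts.
cat > toy_aln.fa <<'EOF'
>virusA
--------accttcccaggt
>virusB
----------cttcccaggt
>virusC
ggggattgaccttcccaggt
>virusD
-------taccttcccaggt
EOF

terminal_insert() { # usage: terminal_insert aligned.fa [min_columns]
  awk -v min="${2:-5}" '
    /^>/ {name=substr($1,2); order[++n]=name; next}
    {seq[name]=seq[name] $0}               # join wrapped sequence lines
    END{
      best=-1; second=-1
      for(i=1;i<=n;i++){
        s=seq[order[i]]; t=s; sub(/^-+/,"",t)
        lead[i]=length(s)-length(t)        # number of leading gap columns
        if(best<0 || lead[i]<lead[best]){second=best; best=i}
        else if(second<0 || lead[i]<lead[second]) second=i
      }
      # Report the earliest-starting sequence if it overhangs all others by >= min columns.
      if(n>1 && lead[second]-lead[best]>=min)
        print order[best], substr(seq[order[best]], lead[best]+1, lead[second]-lead[best])
    }' "$1"
}

terminal_insert toy_aln.fa   # prints: virusC ggggatt
```

The printed overhang is the part that could then be pasted into BLAST to find out where it came from.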

The 3' end of the first version of Wuhan-Hu-1 accidentally included a 618-base segment of human DNA. [https://x.com/ChrisDeZPhD/status/1290218272705531905] The first 20 of the 618 bases are part of the actual genome of SARS2 which may have confused MEGAHIT. You can do a BLAST search for the 618-base segment by going here: https://blast.ncbi.nlm.nih.gov/Blast.cgi?PROGRAM=blastn. Then paste the sequence below to the big text field at the top and press the "BLAST" button:

$ curl -s 'https://eutils.ncbi.nlm.nih.gov/entrez/eutils/efetch.fcgi?db=nuccore&rettype=fasta&id=MN908947.1'|seqkit subseq -r -618:-1

>MN908947.1 Wuhan seafood market pneumonia virus isolate Wuhan-Hu-1, complete genome

tgtgattttaatagcttcttcctgggatcttgatttcaacagcctcttcctcatactatt

ctcaacactactgtcagtgaacttctaaaaatggattctgtgtttgaccgtaggaaacat

ttcagtattccttatctttagaaacctctgcaactcaagtgtctctgccaaagagtcttc

aaggtaatgtcaaataccataacatattttttaagtttttgtatacttcctaggaatata

tgtcatagtatgtaaagaagatctgattcatgtgatctgtactgttaagaaacacttcaa

aggaaaacaacctctcatagatcttcaaatcctgttgttcttgagttggaatgtacaata

ttcaggtagagatgctggtacaaaaaaagactgaatcatcaggctattagaaagataaac

taggcctctaacatagtaactttaacagtttgtacttatacatattttcacattgaaata

tagttttattcatgactttttttgttttagcttctctgtcttccattatttcaagctgct

aaaaattaaaaatatcctatagcaaagggctatggcatctttttgtaaaaataaggaaag

caaggttttttgataatc

The best match is 616 bases long because it's missing the last 2 bases of the query, but 614 out of 616 bases are identical with a result titled "Human DNA sequence from clone RP11-173E24 on chromosome 1q31.1-31.3, complete sequence".

I have also found other sarbecovirus sequences where a piece of host DNA or rRNA was accidentally included at either end of the genome. For example in a sequence for the SARS-like bat virus Rs7907, I noticed that the 5' end had an insertion which was missing from other SARS-like viruses:

$ curl https://sars2.net/f/sarbe.fa.xz|xz -dc>sarbe.fa

$ seqkit fx2tab sarbe.fa|grep -Ev 'Rs7931|Ra7909'|grep -C10 Rs7907|awk -F\\t '{print$1 FS substr($2,1,550)}'|seqkit tab2fx|mafft --globalpair --quiet -|seqkit fx2tab|awk -F\\t '{print $2 FS$1}'|cut -d, -f1|column -ts$'\t'

a----------------------------------------------------------------taaaaggattcatccttccc---- MT726044.1 Bat coronavirus isolate PREDICT/PDF-2370/OTBA35RSV

a----------------------------------------------------------------taaaaggattcatccttccc---- MT726043.1 Sarbecovirus sp. isolate PREDICT/PDF-2386/OTBA40RSV

-----------------------------------------------------------------taaaaggattaatccttccc---- KY352407.1 Severe acute respiratory syndrome-related coronavirus strain BtKY72

----------------------------------------------------------------------------------------- NC_014470.1 Bat coronavirus BM48-31/BGR/2008

----------------------------------------------------------------------------------------- GU190215.1 Bat coronavirus BM48-31/BGR/2008

----------------------------------------------------------------------------------------- MZ190137.1 Bat SARS-like coronavirus Khosta-1 strain BtCoV/Khosta-1/Rh/Russia/2020

----------------------------------------------------------------------------------------- OL674081.1 Severe acute respiratory syndrome-related coronavirus isolate Rs7952

----------------------------------------------------------------------------------------- OL674074.1 Severe acute respiratory syndrome-related coronavirus isolate Rs7896

-----------------------------------------------------------------------------accttcccaggt OL674075.1 Severe acute respiratory syndrome-related coronavirus isolate Rs7905

-------------------------------------------------------------------------------cttcccaggt OL674079.1 Severe acute respiratory syndrome-related coronavirus isolate Rs7924

ggggattgcaattattccccatgaacgaggaattcccagtaagtgcgggtcataagcttgcgttgattaagtccctgccctttgtacac OL674076.1 Severe acute respiratory syndrome-related coronavirus isolate Rs7907

------------------------------------------------------------------------------ccttcccaggt OL674078.1 Severe acute respiratory syndrome-related coronavirus isolate Rs7921

a----------------------------------------------------------------ttaaaggtttttaccttcccaggt MZ081380.1 Betacoronavirus sp. RsYN04 strain bat/Yunnan/RsYN04/2020

a----------------------------------------------------------------ttaaaggtttttaccttcccaggt MZ081378.1 Betacoronavirus sp. RmYN08 strain bat/Yunnan/RmYN08/2020

a----------------------------------------------------------------ttaaaggtttttaccttcccaggt MZ081376.1 Betacoronavirus sp. RmYN05 strain bat/Yunnan/RmYN05/2020

When I did a BLAST search for the first 150 bases of the Rs7907 sequence, the first 96 bases were 100% identical with several sequences of mammalian DNA or rRNA, like for example there were results titled "PREDICTED: Pan troglodytes 18S ribosomal RNA (LOC129143085), rRNA" and "Delphinus delphis genome assembly, chromosome: 21".

In the paper where Rs7907 was published, the authors didn't describe removing host reads before they did de-novo assembly: "For SARSr-CoV-positive RNA extraction, next-generation sequencing (NGS) was performed using BGI MGISEQ 2000. NGS reads were first processed using Cutadapt (v.1.18) to eliminate possible contamination. Thereafter, the clean reads were assembled into genomes using Geneious (v11.0.3) and MEGAHIT (v1.2.9). PCR and Sanger sequencing were used to fill the genome gaps. To amplify the terminal ends, a SMARTer RACE 5'/3'kit (Takara) was used." [https://www.ncbi.nlm.nih.gov/pmc/articles/PMC8344244/] (However I don't know if they did RACE for the 5' end of Rs7907, because they would've noticed the assembly error in their contig if they had incorporated the RACE sequences into it. Or maybe they just published their MEGAHIT contig without combining it with the RACE sequences.)

The assembly error in the first version of Wuhan-Hu-1 was also mentioned by Eddie Holmes, who tweeted that "Zhang's sequence did have some human sequences at the ends which we quickly fixed". [https://x.com/edwardcholmes/status/1643722487333789696] And in an email to USMortality, Eddie Holmes also wrote: "I do know that the initial upload to Virological/GenBank had some human DNA at the 5' and 3' ends that was then corrected." [https://usmortality.substack.com/p/why-do-wu-et-al-2020-refuse-to-answer] However the insert at the 5' end did not actually come from the human genome but from another part of the genome of SARS2.

When the middle part of an RNA sequence is known but it's not certain if the ends of the sequence are correct, the ends can be sequenced accurately using a method called RACE (rapid amplification of cDNA ends). RACE is also known as "one-sided PCR" or "anchored PCR", and it basically requires specifying only a single primer for each amplified segment instead of a forward and reverse primer like in regular PCR. [https://en.wikipedia.org/wiki/Rapid_amplification_of_cDNA_ends]

In the third version of Wuhan-Hu-1 at GenBank, an additional 44 bases were inserted to the 3' end so that the poly(A) tail became 33 bases long. I believe it's because the ends of the genome were sequenced with RACE, because the paper by Wu et al. said: "The genome sequence of this virus, as well as its termini, were determined and confirmed by reverse-transcription PCR (RT-PCR)10 and 5'/3' rapid amplification of cDNA ends (RACE), respectively." [https://www.nature.com/articles/s41586-020-2008-3]
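The lengths of the second and third versions are consistent with the changes described in this text. The check below is plain shell arithmetic on numbers quoted above (the 27-base start replaced by the 11-base ATTAAAGGTTT, and 44 bases added at the 3' end); the variable names are only for illustration.

```shell
v2_len=29875        # length of MN908947.2
old_start=27        # CGGTGACGCATACAAAACATTCCCACC, removed from the 5' end
new_start=11        # ATTAAAGGTTT, added to the 5' end
added_3prime=44     # bases added to the 3' end, including the poly(A) tail

v3_len=$((v2_len - old_start + new_start + added_3prime))
echo "$v3_len"      # prints 29903, the length of MN908947.3
```

The single nucleotide change in the N gene is a substitution, so it doesn't affect the length.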

Supplementary Table 6 shows the primers used to do RACE, where on each row the number in parentheses is the expected length of the amplicon which extends from the primer until the end of the genome: [https://static-content.springer.com/esm/art%3A10.1038%2Fs41586-020-2008-3/MediaObjects/41586_2020_2008_MOESM1_ESM.pdf]

| D. Primers used in 5'/3' RACE | | |
|---|---|---|
| 5-GSP | CCACATGAGGGACAAGGACACCAAGTG | 573-599 (599 bp) |
| 5-GSPn | CATGACCATGAGGTGCAGTTCGAGC | 491-515 (515 bp) |
| 3-GSP | TGTCGCGCATTGGCATGGAAGTCACACC | 29212-29239 (688 bp) |
| 3-GSPn | CTCAAGCCTTACCGCAGAGACAGAAG | 29398-29423 (502 bp) |
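The amplicon lengths in the table can be checked against the primer coordinates. This sketch assumes that a 5' amplicon runs from position 1 to the end coordinate of the primer, and that a 3' amplicon runs from the start coordinate of the primer to the last position of the genome. `race_check` is a hypothetical helper name, and the rows are copied from the table.

```shell
# Input columns: primer name, start, end, expected amplicon length in bp.
race_check() {
  awk '$1 ~ /^5-/ {print $1, "amplicon_1_to_end=" $3, "table=" $4}
       $1 ~ /^3-/ {print $1, "implied_last_position=" ($2+$4-1)}'
}

printf '%s\n' \
  '5-GSP 573 599 599' \
  '5-GSPn 491 515 515' \
  '3-GSP 29212 29239 688' \
  '3-GSPn 29398 29423 502' | race_check
```

For both 5' primers the expected length equals the end coordinate, and both 3' primers imply the same last position of 29,899. That is 4 less than the length of MN908947.3, and I don't know which coordinates the table was based on.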

In 2020, Steven Quay posted a tweet where he wrote: "Dr. Shi lied? Below is NEW RaTG13 sequence https://ncbi.nlm.nih.gov/nuccore/MN996532 with the missing 1-15 nt at 5' end. So I blasted 1-180 of this new sequence against her RNA-Seq for RaTG13 to find the missing 15 nt. But they are not there. So 1-15 nt appears FABRICATED by Dr. Shi & WIV." [https://x.com/quay_dr/status/1318041151211884552] Actually the RACE sequences of RaTG13 were only added to SRA in December 2021 after Quay's tweet, but one of them matched the segment of 15 nucleotides which was added to the start of the second version of RaTG13: [https://www.ncbi.nlm.nih.gov/sra/?term=SRR16979454]

$ fastq-dump SRR16979454 # RACE sequences of RaTG13

$ curl 'https://eutils.ncbi.nlm.nih.gov/entrez/eutils/efetch.fcgi?db=nuccore&rettype=fasta&id=MN996532.'{1,2}|mafft ->ratg.fa # first and second version of RaTG13

[...]

$ aldi2(){ cat "$@">/tmp/aldi;Rscript --no-init-file -e 'm=t(as.matrix(Biostrings::readAAStringSet("/tmp/aldi")));diff=which(rowSums(m!=m[,1])>0);split(diff,cumsum(diff(c(-Inf,diff))!=1))|>sapply(\(x)c(if(length(x)==1)x else paste0(x[1],"-",tail(x,1)),apply(m[x,,drop=F],2,paste,collapse="")))|>t()|>write.table(quote=F,sep=";",row.names=F)';}

;MN996532.1 Bat coronavirus RaTG13, complete genome;MN996532.2 Bat coronavirus RaTG13, complete genome

1-15;---------------;attaaaggtttatac

2125;c;t

2132;c;a

6936;c;a

7127;g;a

18812-18813;gc;tg

29856-29870;aaaaaaaaaaaaaaa;---------------

$ seqkit locate -irp attaaaggtttatac SRR16979454.fastq|column -ts$'\t' # search RACE sequences for the 15-base segment that was added to the start of the second version of RaTG13

seqID patternName pattern strand start end matched

SRR16979454.4 attaaaggtttatac attaaaggtttatac - 461 475 ATTAAAGGTTTATAC

As far as I know, the RACE sequences used by Wu et al. were never published, but I guess they match a longer part of the poly(A) tail than the metagenomic reads which only included a few bases of the poly(A) tail.

All five BANAL viruses are also missing parts of the 5' UTR like the first version of RaTG13:

$ curl -sL sars2.net/f/sarbe.fa.xz|xz -dc>sarbe.fa

$ wget sars2.net/f/sarbe.pid

$ curl -s 'https://eutils.ncbi.nlm.nih.gov/entrez/eutils/efetch.fcgi?db=nuccore&rettype=fasta&id=MN996532.1'|sed '1s/.*/>RaTG13_version_1_no_RACE/'>ratg1.fa

$ awk -F\\t 'NR==1{for(i=2;i<=NF;i++)if($i~/Wuhan-Hu-1/)break;next}$i>93{print$1}' sarbe.pid|cut -d\ -f1|seqkit grep -f- sarbe.fa|cat - ratg1.fa|seqkit subseq -r1:1000|mafft --quiet --thread 4 -|seqkit subseq -r 1:60|seqkit fx2tab|sed $'s/.* \\(.*\\),[^\t]*/\\1/'|column -t

Wuhan-Hu-1 attaaaggtttataccttcccaggtaacaaaccaaccaactttcgatctcttgtagatct

BANAL-20-236/Laos/2020 -ttaaaggtttataccttcccaggtaacaaaccaaccaactctcgatctcttgtagatct

BANAL-20-103/Laos/2020 -----------------------------------------ctcgaattcttgtagatct

BANAL-20-52/Laos/2020 ------------------------taacaaaccaaccaactttcgatctcttgtagatct

RaTG13 attaaaggtttatacctttccaggtaacaaaccaacgaactctcgatctcttgtagatct

bat/Yunnan/RpYN06/2020 attaaaggtttataccttcccaggtaacaaaccaaccaaccctcgatctcttgtagatct

BANAL-20-247/Laos/2020 --------------------------------------actctcgatctcttgtagatct

BANAL-20-116/Laos/2020 ------------------------------------------------------------

RaTG13_version_1_no_RACE ---------------ctttccaggtaacaaaccaacgaactctcgatctcttgtagatct

When I did local alignment of the metagenomic reads for RaTG13 against the second version of RaTG13 at GenBank, the minimum starting position among the aligned reads was only 15:

$ curl -s 'https://eutils.ncbi.nlm.nih.gov/entrez/eutils/efetch.fcgi?db=nuccore&rettype=fasta&id=MN996532'>ratg13.fa

$ parallel-fastq-dump --gzip --threads 10 --split-files --sra-id SRR11085797

[...]

$ minimap2 -a --sam-hit-only ratg13.fa SRR11085797_[12].fastq.gz|awk '$10=="*"{$10=x}$11=="*"{$11=y}{x=$10;y=$11}1' {,O}FS=\\t|samtools sort -@3 ->ratg13.bam

[...]

$ samtools view ratg13.bam|head -n4|cut -f1,2,4,6,10,12|tr \\t \|

SRR11085797.8084709|0|15|151M|CCTTTCCAGGTAACAAACCAACGAACTCTCGATCTCTTGTAGATCTGTTCTCTAAACGAACTTTAAAATCTGTGTGACTGTCACTCGGCTGCATGCTTAGTGCACTCACGCAGTATAATTAATAACTAATTACTGTCGTTGACAGGACACG|NM:i:0

SRR11085797.8811160|0|16|150M|CTTTCCAGGTAACAAACCAACCAACTCTCGATCTCTTGTAGATCTGTTCTCTAAACGAACTTTAAAATCTGTGTGACTGTCACTCGGCTGCATGCTTAGTGCACTCACGCAGTATAATTAATAACTAATTACTGTCGTTTACAGGACACG|NM:i:2

SRR11085797.8084709|16|18|150M|TTCCAGGTNACAAACCAACGAACTCTCGATCTCTTGTAGATCTGTTCTCTAAACGAACTTTAAAATCTGTGTGACTGTCACTCGGCTGCATGCTTAGTGCACTCACGCAGTATAATTAATAACTAATTACTGTCGTTGACAGGACACGAG|NM:i:1

SRR11085797.3139935|0|25|143M|TAACAAACCAACCAACTCTCGATCTCTTGTAGATCTGTTCTCTAAACGAACTTTAAAATCTGTGTGACTGTCACTCGGCTGCATGCTTAGTGCACTCACGCAGTATAATTAATAACTAATTACTGTCGTTGACAGGACACGAG|NM:i:1

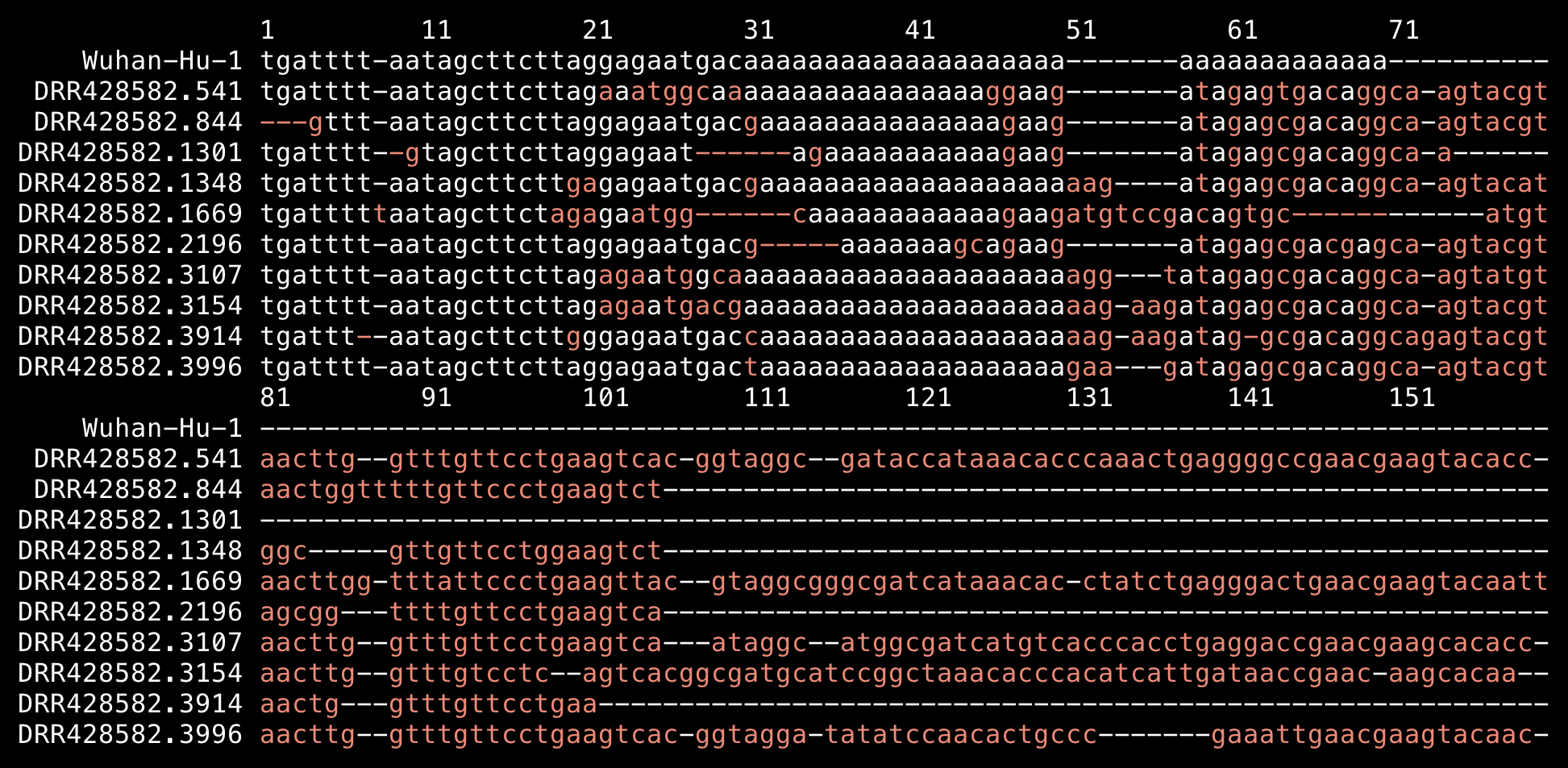

The third version of Wuhan-Hu-1 has a 33-base poly(A) tail, but I think they sequenced the tail with RACE because there's only a few bases of the tail included in the metagenomic reads published at the SRA. The output below shows reads that aligned against the end of the third version of Wuhan-Hu-1. To save space, I only included one read per starting position even though many positions have over ten aligned reads. The first column shows the starting position and the second column shows the number of mismatches relative to Wuhan-Hu-1:

$ brew install bowtie2 fastp samtools

[...]

$ wget ftp://ftp.sra.ebi.ac.uk/vol1/fastq/SRR109/081/SRR10971381/SRR10971381_{1,2}.fastq.gz

[...]

$ curl -s 'https://eutils.ncbi.nlm.nih.gov/entrez/eutils/efetch.fcgi?db=nuccore&rettype=fasta&id=MN908947.3'>sars2.fa

$ bowtie2-build sars2.fa{,}

[...]

$ fastp -i SRR10971381_1.fastq.gz -I SRR10971381_2.fastq.gz -o SRR10971381_1.trim.fq.gz -O SRR10971381_2.trim.fq.gz -l 70

[...]

$ bowtie2 -p3 -x sars2.fa -1 SRR10971381_1.trim.fq.gz -2 SRR10971381_2.trim.fq.gz --no-unal|samtools sort -@2 ->sorted58.bam

[...]

$ samtools view sorted58.bam|awk -F\\t '!a[$4]++{x=$4;y=$10;sub(/.*NM:i:/,"");print x,$1,y}'|tail -n30

29763 1 AATGAACAATGCTAGGGAGAGCTGCCTATATGGAAGAGCCCTAATGTGTAAAATTAATTTTAGTAGTGCTATCCCCATGTGATTTTAATAGCTTCTTAGGAG

29767 2 TTCAATGCTAGGGAGAGCTGCCTATATGGAAGAGCCCTAATGTGTAAAATTAATTTTAGTAGTGCTATCCCCATGTGATTTTAATAGCTTCTTAGGAGAA

29769 8 GGCTGCTAGGGAGAGCTGCCTATATGGAAGAGCCCTAATGTGTAAAATTAATTTTAGTAGTGCTATCCCCATGTGATTTTAATAGCGTCTCTTTAGA

29771 4 GGTCTAGGGAGAGCTGCCTATATGGAAGAGCCCTAATGTGTAAAATTAATTTTAGTAGTGCTATCCCCAA

29772 2 TTTTAGGGAGAGCTGCCTATATGGAAGAGCCCTAATGTGTAAAATTAATTTTAGTAGTGCTATCCCCATGTGATTTTAATAGCTTCTTAGGAGAA

29773 1 GTTAGGGAGAGCTGCCTATATGGAAGAGCCCTAATGTGTAAAATTAATTTTAGTAGTGCTATCCCCATGTGATTTTAATAGCTTCTTAGGAGA

29775 2 TATGGAGAGCTGCCTATATGGAAGAGCCCTAATGTATAAAATTAATTTTAGTAGTGCTATCCCCATGTGATTTTAATAGCTTCTTAGGAGAATGACA

29776 6 TTGGAGAGCTGCCTATATGGCAGAGCCCTAATGTGTAAAATTAATTTTAGTAGTGCTATCCCCATGTGATTTTAATAGCTTCTTAGATGC

29777 2 ATGAGAGCTGCCTATATGGAAGAGCCCTAATGTGTAAAATTAATTTTAGTAGTGCTATCCCCATGTGATTTTAATAGCTTCTTAGGAG

29780 1 AGTGCTGCCTATATGGAAGAGCCCTAATGTGTAAAATTAATTTTAGTAGTGCTATCCCCATGTGATTTTAATAGCTTCTTAGG

29781 4 AAACTGCCTATATGGAAGAGCCCTAATGTGTAAAATTAATTTTAGTAGTGCTATCCGCATGTGATTTTAATAGCTTCTTAGGAGAATAACA

29791 1 TGTGGAAGAGCCCTAATGTGTAAAATTAATTTTAGTAGTGCTATCCCCATGTGATTTTAATAGCTTCTTAGGAGAA

29855 8 CTGTTAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAA

29857 8 AATAAAAAAAACAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAA

29858 8 AAAAAAAAAACTCAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAA

29859 6 AAAAAGAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAA

29860 7 AAATAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAA

29861 6 AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAA

29862 3 AAAAATCACAAAAAAAAAAAAAAAAAAAAAAAA

29863 4 AAAAAAATAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAA

29864 4 AAACAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAA

29865 1 AATGAAAAAAAAAAAAAAAAAAAAAAAAAAA

29866 3 AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAA

29867 4 AAAAAAAAAATAAAAAAAAAAAAAAAAAAAAAAAAAA

29868 2 AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAA

29869 1 AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAA

29870 1 AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAA

29871 0 AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAA

29872 1 AATAAAAAAAAAAAAAAAAAAAAAAAAAAAAA

29873 1 AAAAAATAAAAAAAAAAAAAAAAAAAAAAAA

In the output above, there's a huge gap between positions 29,791 and 29,855 with no reads starting from the 63 positions in between. I think it's because the reads after the gap don't actually come from SARS2 but from some other organisms.
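The gap can also be located programmatically instead of by eye. Below is a minimal Python sketch that finds the widest run of positions with no read starting in it. The function name `largest_gap` is made up for illustration, and the list contains only some of the starting positions copied from the output above:

```python
def largest_gap(starts):
    """Return (previous_start, next_start, missing) for the widest gap
    between consecutive read starting positions."""
    ordered = sorted(set(starts))
    best = None
    for prev, nxt in zip(ordered, ordered[1:]):
        missing = nxt - prev - 1  # positions strictly between the two starts
        if best is None or missing > best[2]:
            best = (prev, nxt, missing)
    return best

# Some of the starting positions from the output above, on both sides of the gap
starts = [29763, 29767, 29769, 29771, 29772, 29773, 29775, 29776, 29777,
          29780, 29781, 29791, 29855, 29857, 29858, 29859, 29860]

print(largest_gap(starts))
```

The third element of the returned tuple is the number of positions between the two starts where no read begins.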

The last 52 bases of Wuhan-Hu-1 before the poly(A) tail are ATTTTAGTAGTGCTATCCCCATGTGATTTTAATAGCTTCTTAGGAGAATGAC, which the reads above the gap match to within a couple of mismatches. But the first read below the gap starts with CTGTT, which has three nucleotide changes from ATGAC at the corresponding position in Wuhan-Hu-1. Out of the reads whose starting position is 29,700 or above, there's only one read which has five A bases after the sequence ATGAC:

$ samtools view sorted58.bam|awk '$4>=29700'|cut -f10|grep -Eo ATGACA+|sort|uniq -c|sort -n

1 ATGACAAAAA

2 ATGACAAA

2 ATGACAAAA

8 ATGACA
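The same tally can be sketched in Python for anyone who prefers it to the `grep -Eo`, `sort` and `uniq -c` pipeline. This is only an illustration: `count_tail_runs` is a made-up name, and the three read fragments are hypothetical examples rather than the full set of reads:

```python
import re
from collections import Counter

def count_tail_runs(reads, anchor="ATGAC"):
    """Count each distinct anchor + A-run found in the reads.
    The match is greedy, like grep -Eo 'ATGACA+'."""
    pattern = re.compile(re.escape(anchor) + "A+")
    counts = Counter()
    for read in reads:
        counts.update(pattern.findall(read))
    return counts

# Hypothetical read fragments that end near the start of the poly(A) tail
reads = [
    "TAGCTTCTTAGGAGAATGACAAAA",
    "TAGCTTCTTAGGAGAATGACAAA",
    "TAGCTTCTTAGGAGAATGAC",  # no A after ATGAC, so it is not counted
]

for match, n in sorted(count_tail_runs(reads).items()):
    print(n, match, "A-run length:", len(match) - len("ATGAC"))
```

Because the match is greedy, each read is counted under the longest A-run it contains, so a read with five A bases is not also counted under the shorter runs.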

I'm not sure which of the dates above are UTC and which are local time.

In Supplementary Table 8 of Wu et al. 2020, there's a list of the PCR primers they used to do PCR-based sequencing in order to verify the results of the metagenomic de-novo assembly they did with MEGAHIT. [https://static-content.springer.com/esm/art%3A10.1038%2Fs41586-020-2008-3/MediaObjects/41586_2020_2008_MOESM1_ESM.pdf] The last reverse primer is AAAATCACATGGGGATAGCACTACT, which is shown to end at position 29,864, so I guess that by the time they designed the primers, they had already noticed that they accidentally included a piece of human DNA at the end of the first version of Wuhan-Hu-1 at GenBank. The first forward primer is CCAGGTAACAAACCAACCAACTT and it's shown to start at position 36, which matches the coordinates in the first and second version of Wuhan-Hu-1 but not the third version, so I guess they designed the primers based on the second version. However another part of Supplementary Table 8 says that the "primers for WHCV detection using qPCR" were "designed based on the whole genome of WHCV (MN908947.3)", and the coordinates of the primers match the third version of Wuhan-Hu-1 at GenBank and not the second version. So I guess Wu et al. designed different sets of primers based on different versions of the genome.
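A reverse primer binds to the reverse complement of the genome, so to locate it you have to reverse-complement it first. Here is a small Python sketch that does this for the last reverse primer and looks it up in the sequence quoted earlier as the end of Wuhan-Hu-1 before the poly(A) tail. The helper name `reverse_complement` is my own, and the offset it reports is relative to that short sequence, not a genome coordinate:

```python
COMPLEMENT = str.maketrans("ACGT", "TGCA")

def reverse_complement(seq):
    """Return the reverse complement of a DNA sequence (A, C, G, T only)."""
    return seq.translate(COMPLEMENT)[::-1]

# End of Wuhan-Hu-1 before the poly(A) tail, as quoted earlier in the text
tail = "ATTTTAGTAGTGCTATCCCCATGTGATTTTAATAGCTTCTTAGGAGAATGAC"
# Last reverse primer from Supplementary Table 8
reverse_primer = "AAAATCACATGGGGATAGCACTACT"

site = reverse_complement(reverse_primer)
offset = tail.find(site)  # 0-based offset within `tail`, -1 if absent

print(site)
print(offset)
```

The primer's binding site lies entirely inside the part of the genome that is covered by reads, a short distance before the poly(A) tail.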

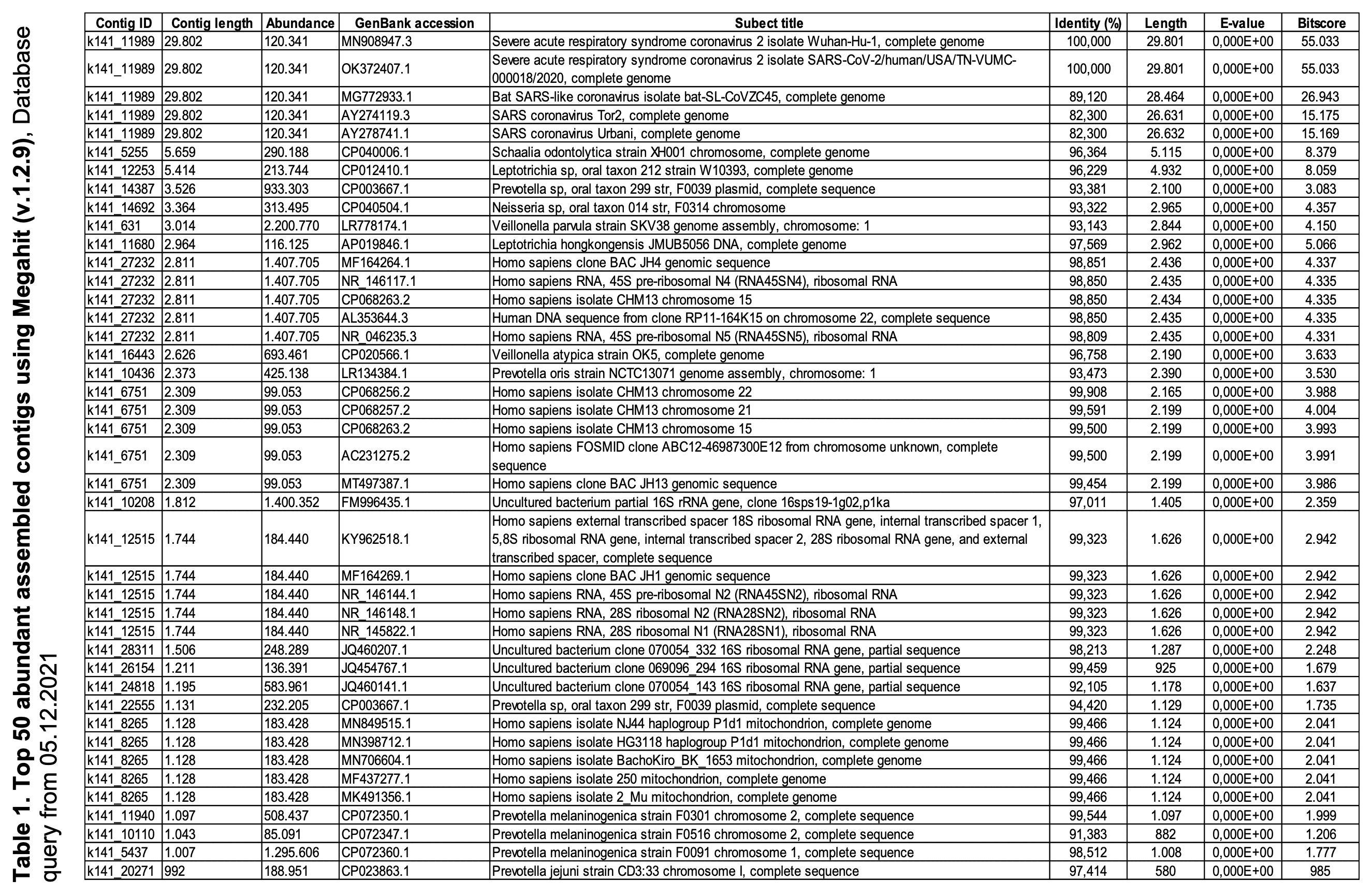

Below I ran MEGAHIT for Wu et al.'s lung metagenomic reads. When I did trimming with fastp, my longest contig was 29,802 bases long and it missed the last 102 bases of MN908947.3 and had an insertion of one base at the beginning. When I did trimming with trimmomatic, my longest contig was 29,875 bases long and now it only missed the last 30 bases of MN908947.3 even though it still had the single-base insertion at the beginning:

brew install megahit -s # `-s` compiles from source because the bottle was compiled against GCC 9 so it gave me the error `dyld: Library not loaded: /usr/local/opt/gcc/lib/gcc/9/libgomp.1.dylib`

wget ftp://ftp.sra.ebi.ac.uk/vol1/fastq/SRR109/081/SRR10971381/SRR10971381_{1,2}.fastq.gz # download from ENA because it's faster than downloading from SRA and doesn't require installing sratoolkit

fastp -i SRR10971381_1.fastq.gz -I SRR10971381_2.fastq.gz -o SRR10971381_1.trim.fq.gz -O SRR10971381_2.trim.fq.gz -l 70

megahit -1 SRR10971381_1.trim.fq.gz -2 SRR10971381_2.trim.fq.gz -o megahutrim

brew install trimmomatic

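trimmomatic PE -threads 4 SRR10971381_{1,2}.fastq.gz SRR10971381_1.{paired,unpaired}.fq.gz SRR10971381_2.{paired,unpaired}.fq.gz AVGQUAL:20 HEADCROP:12 LEADING:3 TRAILING:3 MINLEN:75 # adapter trimming was omitted here; trimmomatic expects options before the file names and the output files in the order 1P 1U 2P 2U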

megahit -1 SRR10971381_1.paired.fq.gz -2 SRR10971381_2.paired.fq.gz -o megahutrimmo

The paper by Wu et al. says: "In total, we generated 56,565,928 sequence reads that were de novo-assembled and screened for potential aetiological agents. Of the 384,096 contigs assembled by Megahit [9], the longest (30,474 nucleotides (nt)) had a high abundance and was closely related to a bat SARS-like coronavirus (CoV) isolate - bat SL-CoVZC45 (GenBank accession number MG772933) - that had previously been sampled in China, with a nucleotide identity of 89.1% (Supplementary Tables 1, 2)." And 56,565,928 is identical to the number of reads in the SRA run deposited by Wu et al. [https://www.ncbi.nlm.nih.gov/sra/?term=SRR10971381] And 30,474 is identical to the length of the first version of Wuhan-Hu-1 at GenBank.

However when I tried aligning the reads in Wu et al.'s metagenomic dataset against the first version of Wuhan-Hu-1, I got zero coverage for the last 579 bases, and the following were the reads with the highest ending position:

$ curl -s 'https://eutils.ncbi.nlm.nih.gov/entrez/eutils/efetch.fcgi?db=nuccore&rettype=fasta&id=MN908947.1'>wuhu1.fa

$ bowtie2-build wuhu1.fa{,}

[...]

$ bowtie2 -p3 -x wuhu1.fa -1 SRR10971381_1.fastq.gz -2 SRR10971381_2.fastq.gz --no-unal|samtools sort -@2 ->sorted59.bam

[...]

$ samtools view sorted59.bam|ruby -alne'puts [$F[3],$F[3].to_i+$F[5].scan(/\d+(?=[MD])/).map(&:to_i).sum,$F[5],$F.grep(/NM:i/)[0][/\d+/],$F[9]]*" "'|sort -nk2|tail|column -t

29791 29887 96M 9 CGGGGAGAGCTGCCTATATGGAAGAGCCCTAATGTGTAAAATTAATTTTAGTAGTGCTATCCCCATGTGATTTTAATAGCTTCTTAGGAGAATGTC

29797 29888 91M 12 AAACTGCCTATATGGAAGAGCCCTAATGTGTAAAATTAATTTTAGTAGTGCTATCCGCATGTGATTTTAATAGCTTCTTAGGAGAATAACA

29766 29889 118M2I5M 13 GGCTCGAGTGTACAGTGAACAATGCTAGGGAGAGCTGCCTATATGGAAGAGCCCTAATGTGTAAAATTAATTTTAGTAGTGCTATCCCCATCTGATTTTAATAGCTTCTTAGGAGAATGACAAAA

29736 29890 139M3D12M 8 ACCACATTTTCACCGAGGCCACGCGGAGTACGATCGAGTGTACAGTGAACAATGCTAGGGAGAGCTGCCTATATGGAAGAGCCCTAATGTGTAAAATTAATTTTAGTAGTGCTATCCCCATGTGATTTTAATAGCTTCTTAGGAGAATGAC

29736 29890 139M3D12M 8 ACCACATTTTCACCGAGGCCACGCGGAGTACGATCGAGTGTACAGTGAACAATGCTAGGGAGAGCTGCCTATATGGAAGAGCCCTAATGTGTAAAATTAATTTTAGTAGTGCTATCCCCATGTGATTTTAATAGCTTCTTAGGAGAATGAC

29736 29890 139M3D12M 8 ACCACATTTTCACCGAGGCCACGCGGAGTACGATCGAGTGTACAGTGAACAATGCTAGGGAGAGCTGCCTATATGGAAGAGCCCTAATGTGTAAAATTAATTTTAGTAGTGCTATCCCCATGTGATTTTAATAGCTTCTTAGGAGAATGAC

29752 29890 132M2I6M 10 GGCCACGCGGAGTACGATCGAGTGTACAGTGAACAATGCTAGGGAGAGCTGCCTATATGGAAGAGCCCTAATGTGTAAAATTAATTTTAGTAGTGCTATCCCCATGTGATTTTAATAGCTTCTTAGGAGAATGACAAAAT

29791 29890 99M 11 TAAGGAGAGCTGCCTATATGGAAGAGCCCTAATGTGTAAAATTAATTTTAGTAGTGCTATCCCCATGTGATTTTAATAGCTTCTTAGGAGAATGACAAA

29766 29891 125M 13 TTATCGAGTGTACAGTGAACAATGCTAGGGAGAGCTGCCTATATGGAAGAGCCCTAATGTGTAAAATTAATTTTAGTAGTGCTATCCCCATGTGATTTTAATAGCTTCTTAGGAGAATGAGAAAA

29752 29895 123M3D17M 13 TGACACGCGGAGTACGATCGAGTGTACAGTGAACAATGCTAGGGAGAGCTGCCTATATGGAAGAGCCCTAATGTGTAAAATTAATTTTAGTAGTGCTATCCCCATGTGATTTTAATAGCTTCTTAGGAGAATGACAAAAA
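The second column of the output above is the starting position plus the number of reference bases the alignment consumes, which is the sum of the M and D lengths in the CIGAR string. Insertions (I) do not consume reference bases. Here is a minimal Python sketch of the same calculation, with function names that are my own:

```python
import re

CIGAR_OP = re.compile(r"(\d+)([MIDNSHP=X])")

def reference_span(cigar):
    """Number of reference bases consumed by an alignment.
    Only M, D, N, = and X operations advance along the reference."""
    return sum(int(n) for n, op in CIGAR_OP.findall(cigar) if op in "MDN=X")

def end_exclusive(pos, cigar):
    """Start position plus reference span, as in the second column above."""
    return pos + reference_span(cigar)

# Start positions and CIGAR strings copied from the output above
for pos, cigar in [(29791, "96M"), (29766, "118M2I5M"),
                   (29736, "139M3D12M"), (29752, "123M3D17M")]:
    print(pos, end_exclusive(pos, cigar), cigar)
```

Under this convention the last aligned base is one less than the printed value, because the base at the starting position is itself part of the span.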

In Wu et al.'s metagenomic dataset, about half of the reads have been masked entirely so that they consist of only N bases:

$ seqkit grep -rsp '^N+$' SRR10971381_1.fastq.gz|seqkit head -n1

@SRR10971381.2 2 length=151

NNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNN

+

!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!

$ seqkit grep -rsp '^N+$' SRR10971381_1.fastq|seqkit stat # out of a total of about 28 million reads, about 14 million reads consist of only N letters

file format type num_seqs sum_len min_len avg_len max_len

- FASTQ DNA 13,934,971 2,027,338,447 35 145.5 151
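If seqkit is not available, the masked reads can be counted with a few lines of Python. This is a rough sketch which assumes a plain four-line-per-record FASTQ file. The function name `count_masked` and the two-read sample are made up for illustration:

```python
import io

def count_masked(handle):
    """Return (masked, total) for a FASTQ text stream with four lines per record.
    A read counts as masked when its sequence consists of only N bases."""
    masked = total = 0
    for i, line in enumerate(handle):
        if i % 4 == 1:  # the sequence is the second line of each record
            total += 1
            seq = line.strip()
            if seq and set(seq) == {"N"}:
                masked += 1
    return masked, total

# Made-up sample: one fully masked read and one read with a few N bases
sample = io.StringIO("@r1\nNNNNNNNN\n+\n!!!!!!!!\n@r2\nACGTNNAC\n+\nIIIIIIII\n")
print(count_masked(sample))

# With a real gzipped file (the path is an assumption):
# import gzip
# with gzip.open("SRR10971381_1.fastq.gz", "rt") as fh:
#     print(count_masked(fh))
```

A read with only some N bases is not counted as masked, which matches the anchored pattern `^N+$` in the seqkit command.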

So it could be that by the time Wu et al. submitted the reads to SRA, they had noticed that their initial 30,474-base contig included a segment of human DNA at the end, so maybe they replaced the reads which matched human DNA with reads that consisted of only N letters. That would explain why the number of reads at SRA is the same as the number of reads which produced their 30,474-base contig but why the contig cannot be reproduced. But it could be that at the point when they noticed the assembly error in MN908947.1, they were no longer able to update the main text of their paper in Nature, which might explain why the assembly error in MN908947.1 was accounted for in Supplementary Table 8 but not in the main text of the paper.

Wu et al. wrote that about 24 million reads out of about 57 million reads remained after human reads were filtered out:

Sequencing reads were first adaptor and quality trimmed using the Trimmomatic program[32]. The remaining 56,565,928 reads were assembled de novo using both Megahit (v.1.1.3)[9] and Trinity (v.2.5.1)[33] with default parameter settings. Megahit generated a total of 384,096 assembled contigs (size range of 200-30,474 nt), whereas Trinity generated 1,329,960 contigs with a size range of 201-11,760 nt. All of these assembled contigs were compared (using BLASTn and Diamond BLASTx) against the entire non-redundant (nr) nucleotide and protein databases, with e values set to 1 × 10^−10 and 1 × 10^−5, respectively. To identify possible aetiological agents present in the sequencing data, the abundance of the assembled contigs was first evaluated as the expected counts using the RSEM program[34] implemented in Trinity. Non-human reads (23,712,657 reads), generated by filtering host reads using the human genome (human release 32, GRCh38.p13, downloaded from Gencode) by Bowtie2[35], were used for the RSEM abundance assessment.

However in the STAT analysis for the reads uploaded to SRA, only about 0.1% of the reads match Homo sapiens, about 0.8% of the reads match simians, and about 4.6% of the reads match eukaryotes. There's also an unusually low percentage of only 39% identified reads, because the half of the reads which consist of only N bases are unidentified:

$ curl -s 'https://trace.ncbi.nlm.nih.gov/Traces/sra-db-be/run_taxonomy?cluster_name=public&acc=SRR10971381'>SRR10971381.stat

$ jq -r '.[].tax_totals.total as$tot|.[].tax_table|sort_by(-.total_count)[]|(100*.total_count/$tot|tostring)+";"+.org' SRR10971381.stat|egrep 'Eukaryota|Simiiformes|Homo sapiens'

4.591841930004224;Eukaryota

0.8129416704698984;Simiiformes

0.1192095708215023;Homo sapiens

$ jq -r '.[].tax_totals|100*.identified/.total' SRR10971381.stat

39.12950566284354
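The jq expressions above divide the read count of each taxonomy node by the total number of reads in the run. The same arithmetic can be sketched in Python. The JSON layout here (a list of runs, each with `tax_totals` and `tax_table`) is only inferred from the jq expressions, the toy numbers are made up, and this version matches names exactly instead of by substring like egrep:

```python
import json

def percent_by_taxon(stat, names):
    """Percentage of all reads in the run assigned to each named taxon."""
    out = {}
    for run in stat:
        total = run["tax_totals"]["total"]
        for node in run["tax_table"]:
            if node["org"] in names:
                out[node["org"]] = 100 * node["total_count"] / total
    return out

def percent_identified(stat):
    """Percentage of reads that were classified at all, one value per run."""
    return [100 * run["tax_totals"]["identified"] / run["tax_totals"]["total"]
            for run in stat]

# Made-up toy data in the layout that the jq expressions assume
toy = json.loads("""
[{"tax_totals": {"total": 1000, "identified": 400},
  "tax_table": [{"org": "Eukaryota", "total_count": 50},
                {"org": "Homo sapiens", "total_count": 2},
                {"org": "Bacteria", "total_count": 330}]}]
""")

print(percent_by_taxon(toy, {"Eukaryota", "Homo sapiens"}))
print(percent_identified(toy))
```

Because the denominator is the total number of reads, including unidentified ones, fully masked reads lower every percentage.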

In order to compare the results to other human BALF samples from patients with pneumonia, I searched the SRA for the phrase bronchoalveolar pneumonia metagenomic human. In one run which was part of a study titled "Bronchoalveolar Lavage Fluid Metagenomic Next-Generation Sequencing in non-severe and severe Pneumonia", about 86% of the reads were identified and 76% matched simians:

[https://www.ncbi.nlm.nih.gov/sra/?term=SRR22183690]

$ curl -s 'https://trace.ncbi.nlm.nih.gov/Traces/sra-db-be/run_taxonomy?cluster_name=public&acc=SRR22183690'>SRR22183690.stat

$ jq -r '.[].tax_totals.total as$tot|.[].tax_table|sort_by(-.total_count)[]|(100*.total_count/$tot|tostring)+";"+.org' SRR22183690.stat|egrep 'Eukaryota|Simiiformes|Homo sapiens'

81.79456158724525;Eukaryota

75.61440435123265;Simiiformes

12.85625010794863;Homo sapiens

$ jq -r '.[].tax_totals|100*.identified/.total' SRR22183690.stat

86.16966070371517

In an early metagenomic sequencing run for SARS2 titled "Total RNA sequencing of BALF (human reads removed)", only about 0.6% of reads matched simians. The run was submitted by Wuhan University and published on January 18th 2020, and I didn't find any SARS2 run on SRA with an earlier publication date. [https://www.ncbi.nlm.nih.gov/bioproject/PRJNA601736] About 13% of the reads were masked so that they consisted of only N bases:

$ curl -s 'https://trace.ncbi.nlm.nih.gov/Traces/sra-db-be/run_taxonomy?cluster_name=public&acc=SRR10903402'>SRR10903402.stat

$ jq -r '.[].tax_totals.total as$tot|.[].tax_table|sort_by(-.total_count)[]|(100*.total_count/$tot|tostring)+";"+.org' *02.stat|egrep 'Eukaryota|Simiiformes|Homo sapiens'

1.435508516404756;Eukaryota

0.5964291097600983;Simiiformes

$ jq -r '.[].tax_totals|100*.identified/.total' SRR10903402.stat

71.32440955587016

$ seqkit grep -srp '^N*$' SRR10903402_1.fastq.gz|seqkit head -n1

@SRR10903402.1 1 length=151

NNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNN

+

!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!

$ seqkit seq -s SRR10903402_[12].fastq.gz|grep '^N*$'|wc -l

177770

$ seqkit seq -s SRR10903402_[12].fastq.gz|wc -l

1353388

Human reads may have been masked for ethical reasons, since the SRA's website says: "Human metagenomic studies may contain human sequences and require that the donor provide consent to archive their data in an unprotected database. If you would like to archive human metagenomic sequences in the public SRA database please contact the SRA and we will screen and remove human sequence contaminants from your submission." [https://www.ncbi.nlm.nih.gov/sra/docs/submit/]

The NCBI has a tool called sra-human-scrubber which is used to mask human reads with N bases in SRA submissions:

[https://ncbiinsights.ncbi.nlm.nih.gov/2023/02/02/scrubbing-human-sequences-sra-submissions/]

The Human Read Removal Tool (HRRT; also known as the Human Scrubber) is available on GitHub and DockerHub. The HRRT is based on the SRA Taxonomy Analysis Tool (STAT) that will take as input a fastq file and produce as output a fastq.clean file in which all reads identified as potentially of human origin are masked with 'N'.

You may also request that NCBI applies the HRRT to all SRA data linked to your submitted BioProject (more information below). When requested, all data previously submitted to the BioProject will be queued for scrubbing, and any future data submitted to the BioProject will be automatically scrubbed at load time.

This tool can be particularly helpful when a submission could be contaminated with human reads not consented for public display. Clinical pathogen and human metagenome samples are common submission types that benefit from applying the Human Scrubber tool.

For more information on genome data sharing policies, consult with institutional review boards and the NIH Genomic Data Sharing Policy. It is the responsibility of submitting parties to ensure that they have appropriate consent for human sequence data to be distributed publicly without access controls.

The usage message of the Human Scrubber tool also says that human reads are masked with N bases by default. [https://github.com/ncbi/sra-human-scrubber/blob/master/scripts/scrub.sh]

Wu et al. wrote: "Of the 384,096 contigs assembled by Megahit [9], the longest (30,474 nucleotides (nt)) had a high abundance and was closely related to a bat SARS-like coronavirus (CoV) isolate - bat SL-CoVZC45 (GenBank accession number MG772933) - that had previously been sampled in China, with a nucleotide identity of 89.1% (Supplementary Tables 1, 2)." Some no-virus people think that because there were about 400,000 contigs, it means that MEGAHIT produced about 400,000 candidate genomes for the virus but one of them was arbitrarily selected to be the genome of SARS2, and they also don't understand why Wu et al. selected the longest contig.

However the longest contig happened to be the contig for SARS2 by chance, and when I have run MEGAHIT on other metagenomic samples which have contained a coronavirus, I have sometimes gotten bacterial contigs which were longer than the longest coronavirus contig. For example here where I ran MEGAHIT on a sample of bat feces that contained the bat sarbecovirus RmYN02, my longest contig was a contig for E. coli which was about 50,000 bases long, and the 2nd-longest and 3rd-longest contigs also matched E. coli, but the contig for RmYN02 was only the 4th-longest contig:

$ curl 'https://www.ebi.ac.uk/ena/portal/api/filereport?accession=SRR12432009&result=read_run&fields=fastq_ftp'|sed 1d|cut -f2|tr \; \\n|sed s,^,ftp://,|xargs wget

[...]

$ megahit -1 SRR12432009_1.fastq.gz -2 SRR12432009_2.fastq.gz -o megarmyn02

[...]

$ x='qseqid bitscore pident qcovs stitle qlen slen qstart qend sstart send';seqkit sort -lr megarmyn02/final.contigs.fa|seqkit head -n5|blastn -db nt -remote -num_alignments=1 -outfmt "6 $x">blast

[...]

$ (tr \ \\t<<<$x;awk '!a[$1]++' blast)|sed 's/, complete genome//'|column -ts$'\t'

qseqid bitscore pident qcovs stitle qlen slen qstart qend sstart send

k141_53690 92112 99.968 100 Escherichia coli strain Ec-RL2-1X chromosome 49929 4705802 1 49929 475668 425742

k141_64167 86149 100.000 100 Escherichia coli strain B chromosome 46651 4611841 1 46651 3954621 4001271

k141_22284 66979 100.000 100 Escherichia coli strain B chromosome 36270 4611841 1 36270 3501999 3465730

k141_29207 52181 99.996 100 Escherichia coli strain B chromosome 28260 4611841 1 28260 811981 840240

If Wu et al. had had a larger number of reads, they might have also gotten bacterial contigs with a length over 30,474 bases, because then their additional reads might have filled in the gap between two contigs for different regions of a bacterial genome so that they would've been joined together into a single contig.
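The effect of filling in a gap can be illustrated with a toy model where each read is an interval on a genome and a contig is a maximal run of overlapping reads. This is not how MEGAHIT actually works (it builds de Bruijn graphs from k-mers and needs a minimum amount of overlap), so treat it only as a sketch of the idea. The function name and the coordinates are made up:

```python
def merge_into_contigs(reads):
    """Merge overlapping (start, end) read intervals into contiguous regions."""
    contigs = []
    for start, end in sorted(reads):
        if contigs and start <= contigs[-1][1]:
            # The read overlaps the current contig, so extend it
            contigs[-1][1] = max(contigs[-1][1], end)
        else:
            # The read starts after the current contig ends, so open a new one
            contigs.append([start, end])
    return [tuple(c) for c in contigs]

# Made-up read intervals covering two separate regions of one genome
reads = [(0, 150), (100, 250), (400, 550), (500, 650)]

print(merge_into_contigs(reads))                 # two separate contigs
print(merge_into_contigs(reads + [(240, 410)]))  # one extra read bridges the gap
```

With the extra read, the two shorter contigs are replaced by a single contig that is longer than either of them.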

You can find out which contigs are viral by doing a BLAST search for each contig, like what Wu et al. did in their Supplementary Table 1. It shows that apart from the contig for SARS2, all of their other most abundant contigs were bacterial: [https://static-content.springer.com/esm/art%3A10.1038%2Fs41586-020-2008-3/MediaObjects/41586_2020_2008_MOESM1_ESM.pdf]

You can also find out which contigs are viral by trying to align all contigs against a FASTA file for virus reference sequences. For example here I ran MEGAHIT on Wu et al.'s raw reads. I got only 29,463 instead of 384,096 contigs because human reads were masked with N bases in the version of Wu et al.'s reads that were uploaded to the SRA:

$ brew install megahit -s # `-s` compiles from source because the bottle was compiled against GCC 9 so it gave me the error `dyld: Library not loaded: /usr/local/opt/gcc/lib/gcc/9/libgomp.1.dylib`

[...]

$ curl -s 'https://www.ebi.ac.uk/ena/portal/api/filereport?accession=SRR10971381&result=read_run&fields=fastq_ftp&format=tsv&download=true&limit=0'|sed 1d|cut -f2|tr \; \\n|sed s,^,ftp://,|xargs wget -q

$ megahit -1 SRR10971381_1.fastq.gz -2 SRR10971381_2.fastq.gz -o megawu

[...]

2023-09-17 20:46:46 - 29463 contigs, total 14438186 bp, min 200 bp, max 29802 bp, avg 490 bp, N50 458 bp

2023-09-17 20:46:59 - ALL DONE. Time elapsed: 4545.123885 seconds

When I aligned all contigs against virus refseqs, I got only 8 aligned contigs. There was only one contig for SARS2 because the contig covered almost the entire genome of the virus apart from a part of the 3' UTR, so the genome was not split into multiple shorter contigs. There were 4 contigs which matched different parts of the human endogenous retrovirus K113, which is usually matched by human reads because the genomes of HERVs are incorporated as part of the human genome. There was also one short contig that matched a Streptococcus bacteriophage, and there were two short contigs which matched a parvovirus:

$ conda install -c bioconda bbmap

[...]

$ wget -q https://ftp.ncbi.nlm.nih.gov/refseq/release/viral/viral.1.1.genomic.fna.gz

$ seqkit fx2tab viral.1.1.genomic.fna.gz|sed $'s/A*\t$//'|seqkit tab2fx|bbmask.sh window=20 in=stdin out=viral.fa # bbmask masks low-complexity regions to avoid spurious matches

$ bowtie2-build --threads 3 viral.fa{,}

[...]

$ bowtie2 -p3 --no-unal -x viral.fa -fU megawu/final.contigs.fa|samtools sort -@2 ->megawu.bam

$ x=megawu.bam;samtools coverage $x|awk \$4|cut -f1,3-6|(gsed -u '1s/$/\terr%\tname/;q';sort -rnk4|awk -F\\t -v OFS=\\t 'NR==FNR{a[$1]=$2;next}{print$0,sprintf("%.2f",a[$1])}' <(samtools view $x|awk -F\\t '{x=$3;n[x]++;len[x]+=length($10);sub(/.*NM:i:/,"");mis[x]+=$1}END{for(i in n)print i"\t"100*mis[i]/len[i]}') -|awk -F\\t 'NR==FNR{a[$1]=$2;next}{print$0"\t"a[$1]}' <(seqkit seq -n viral.fa|gsed 's/ /\t/;s/,.*//') -)|column -ts$'\t'

#rname endpos numreads covbases coverage err% name

NC_045512.2 29870 1 29801 99.769 0.01 Severe acute respiratory syndrome coronavirus 2 isolate Wuhan-Hu-1

NC_022518.1 9471 4 3435 36.2686 10.16 Human endogenous retrovirus K113 complete genome

NC_012756.1 31276 1 703 2.24773 4.69 Streptococcus phage PH10

NC_022089.1 3779 2 607 16.0625 3.29 Parvovirus NIH-CQV putative 15-kDa protein
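The err% column in the table above is the total number of mismatches (the NM tag) divided by the total length of the aligned sequences for each reference, times 100. Here is a minimal Python sketch of that calculation. The function name and the toy alignments are made up, and in practice the tuples would be filled in from the `samtools view` output:

```python
from collections import defaultdict

def error_percent(alignments):
    """alignments: iterable of (reference_name, sequence_length, edit_distance).
    Returns 100 * total edit distance / total sequence length per reference."""
    bases = defaultdict(int)
    edits = defaultdict(int)
    for ref, length, nm in alignments:
        bases[ref] += length
        edits[ref] += nm
    return {ref: 100 * edits[ref] / bases[ref] for ref in bases}

# Made-up toy alignments: two contigs on refA and one on refB
toy_alignments = [("refA", 1000, 10), ("refA", 500, 5), ("refB", 200, 20)]

print(error_percent(toy_alignments))
```

Summing before dividing weights each contig by its length, so one long contig with few mismatches can outweigh several short contigs with many.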

The results above match the STAT results of the raw reads, which also include a handful of reads that are classified under parvoviruses and Pseudomonas phages.

MEGAHIT uses a default minimum length of 200 bases for contigs, but I only got two 200-base contigs. When I did a BLAST search for the first contig, it matched the human genome (because a small number of human reads remained in the raw reads at the SRA even though the vast majority of human reads were masked). And when I did a BLAST search for the other contig, it had a 100% match with about 80% coverage for ribosomal RNA sequences of Salmonella, Klebsiella, and some other bacteria:

$ seqkit seq -M 200 megawu/final.contigs.fa|seqkit seq -w0

>k141_7662 flag=0 multi=1.0000 len=200

GCAAGAGTATATTTGCCTAGCCTTGAGGATTTCGTTGGAAACGGGATTGTCTTCAGAGAA
AATCTAGACAGAAGCATTCTCAGAAACTTCTTTGGGATGTTTGCATTCAAGTCACAGAGT
AGAACATTCCCTTTGGTAGAGCAGGTTTGAAACACTCTTTTTTTAGTATATGGAAGTGGA
CATTTGGAGCGCTTTCAGGC

>k141_2082 flag=0 multi=1.0000 len=200

GGAAAGGGCGACGACGTACTTTCGGGTACGAGCATACACAGGTGCTGCATGGCTGTCGTC
AGCTCGTGTTGTGAAATGTTGGGTTAAGTCCCGCAACGAGCGCAACCCTTATCCTTTGTT
GCCAGCGGTCCGGCCGGGAACTCAAAGGAGACTGCCAGTGATAAACTGGAGGAAGGTGGG
GATGACGTCAAGTCATCATG

There were two contigs that were exactly 1,000 bases long. One of the contigs matched Prevotella veroralis on BLAST, but there was no significant similarity found for the other contig:

$ seqkit seq -gM 1000 -m 1000 megawu/final.contigs.fa|seqkit seq -gw0 >k141_23439 flag=1 multi=15.0000 len=1000 GTGATGATACAAGGAACAGCAGAGAGCCGCATGGACGAGTCGAGCATGAATAATATCTATGTTCGCACAACTGCGGGCATGGCTCCAGTAAGTGAGTTCTGTACGTTGAAGCGTGTTTATGGACCGTCAAATATCACTCGTTTCAACCTCTTTACCTCTATTGCGGTTAATGCGACACCAGCAAACGGGGTCTCTTCTGGAGAGGCTATTAAGGCGGCAGAGGAAGTGGCAAAGCAAGTGTTACCACAGGGATATGGCTATGAGTTCTCTGGCTTGACACGTTCAGAGCAGGAGTCATCGAACTCAACAGCGATGATCTTCGTCCTTTGTATCGTGTTTGTTTACTTGATTCTTAGTGCACAGTATGAGAGTTATATCCTCCCATTAGCCGTTATCTTGTCAATACCATTCGGTCTTGCAGGTGCGTTCATATTCACGATGATCTTCGGACATAGCAACGATATCTACATGCAAATATCCCTGATTATGTTGATTGGATTGTTGGCAAAGAACGCCATCCTTATCGTTGAGTTTGCTCTCGAGCGTCGCCGAACAGGTATGGCAATCAAGTATGCAGCCATCTTAGGCGCTGGCGCACGTCTTCGTCCTATCCTTATGACGTCTTTGGCAATGGTTGTCGGATTGTTGCCATTGATGTTTGCAAGCGGTGTAGGTCATAATGGTAACCAGACATTGGGTGCTGCTGCCGTCGGAGGTATGTTGATTGGTACACTCTGTCAGGTGTTTGTTGTACCAGCTCTATTCGCTGGCTTCGAGTACTTACAAGAGCGTATTAAGCCTATCGAGTTTGAAGATGAGGCCAATCGCGACGTCATTAAGGAACTCGAGCAATACGCGAAAGGACCTGCACATGATTATAAGGTAGAAGAGTAGTACTATTTTAAAAGAACAATAATGAAGAATAAAAATATAATCATCATAGTCCTTGCAGCCCTGTCATTGACAGGCTGTAAGAGTCTCTATGGCAATTACGAGTTCT >k141_27896 flag=1 multi=8.0000 len=1000 
TAAAGTTTCTCCGCATGATAAAAAAGCCTGGATTTAATGTTTTTGGAGTTATTAAAGAATTTAACAAAAAAGAAGATAAGGCGGCATTTGTTCCACCTGAATCAACAGTAAAAAAGTCTGAAACAAAGGTAGAAGACAAGAAAACTACGTAACCAAATAGATTCCAATAATGGAAGATTTAATAGGGGTTTTTAGTCGTTTGACCGGACAAAACGAGGATACTGTCAAGGAAGTATTAAAAGACCTTGAAGGCGAGAAATTTGCCAAAGCAGCTGAAAAGCTATTGAATGACAATTTCGGTAAAAAGTTAGCCGAAACAGAATCTAAAGCACGGATTGACGCAGAAAAGAAGCAGCGAAGCCTTATCCTTACTGAACAGGAAAAGAAACTAAAATCAAAGTACAGTATCGAATCATTCGATGACTTTGAAGACTTAGTAGAAAAGATCCACGAGTCAGGAAAGTTAAGTTCCGGTGCAGATGAAGTAAGCGTTAAAAAGATCGAGAAGCTCGAAAAGGACATTGAGAAGATAAAGCAATCTGAACAGACTATTAAAAGCGAATACGAAGCATTTAAAAGTACAACGGAACGCAATGTTTTATCAGGTTCAATCAGAAAGAGCGCACTTGAGTTTTTAGAGCAAAACAATTTCGTGGTAAGTGGTAAGCAAAGGGTAAATGATAATTTCATTGATGAGCTTTTGAATATCGCAGATTATGAGGTTGTGAACGGTGAATTTATTCCACTTATTAAAGGAACGAAAGAGAGGTTGATGGATGATGTAAGAAATCCTATATCCGCTCAAGACTTGTTTAAAAAGGTAGGAATGGATTACTACGATGTTAAAAATGGAAATGATAGAGAAACGGCAGGAGCGTTCAATACGAAGCCTACAGGAACAGGAGGTTCAAAATACAACATAAGTCAATCAAGACTTTAACAATGCAATAGCAGGGGCAAAGACTACAGAAGAACTTGATGTAATCGAAAAAGAATATCA

But basically many of these shorter contigs match different parts of bacterial genomes, and there's only a tiny number of contigs for viruses. The original assembly by Wu et al. also had a lot of human contigs, because human reads had not yet been masked with N bases in the reads they assembled.

The sequencing runs at the SRA have been analyzed with a program called STAT, which stands for SRA Taxonomic Analysis Tool. STAT relies on matches to 32-base k-mers to classify each read under some node in a taxonomical tree, where it's able to classify some reads on the species-level but other reads are only classified at higher taxonomical levels. [https://www.ncbi.nlm.nih.gov/pmc/articles/PMC8450716/]

You can see a visual breakdown of the STAT results from SRA's website by going to the "Analysis" tab and clicking "Show Krona View". [https://trace.ncbi.nlm.nih.gov/Traces/?view=run_browser&acc=SRR10971381&display=analysis] It shows that about 61% of reads are unidentified, about 33% of reads are classified under bacteria out of which about half are classified under Prevotella, about 5% of reads are classified under eukaryotes, and about 0.2% of reads are classified under viruses:

The 4 most abundant leaf nodes in the STAT tree are all different species of Prevotella, followed by SARS2 on the 5th place, and then followed by other species of bacteria, humans, and other hominins from misclassified human reads:

$ curl 'https://trace.ncbi.nlm.nih.gov/Traces/sra-db-be/run_taxonomy?cluster_name=public&acc=SRR10971381'>SRR10971381.stat

$ jq -r '[.[]|.tax_table[]|.parent]as$par|[.[]|.tax_table[]|select(.tax_id as$x|$par|index($x)|not)]|sort_by(-.total_count)[]|((.total_count|tostring)+";"+.org)' SRR10971381.stat|head -n30

171042;Prevotella salivae F0493 (Prevotella are common in the human oral cavity)

155602;Prevotella veroralis F0319

89078;Prevotella scopos JCM 17725

57886;Prevotella melaninogenica D18

54238;Severe acute respiratory syndrome coronavirus 2

51814;Leptotrichia sp. oral taxon 212 (Leptotrichia is a common genus of bacteria in human oral cavity)

41272;Prevotella nanceiensis DSM 19126 = JCM 15639

33716;Homo sapiens

28813;Prevotella veroralis DSM 19559 = JCM 6290

23601;Leptotrichia hongkongensis

14350;Prevotella pallens

12403;Prevotella sp. F0091

9451;Prevotella sp. C561

9261;Prevotella jejuni

8531;Prevotella histicola JCM 15637 = DNF00424

7944;Prevotella sp. oral taxon 313

7612;Prevotella sp. oral taxon 299 str. F0039

7356;Capnocytophaga gingivalis ATCC 33624 (common oral bacteria)

6631;Pan troglodytes

6391;Prevotella loescheii DSM 19665 = JCM 12249 = ATCC 15930

6229;Klebsiella pneumoniae (found in normal flora of the mouth, skin, and intestines)

6104;Veillonella parvula ATCC 17745 (normal part of oral flora)

5389;Prevotella sp. oral taxon 472 str. F0295

5251;Pongo abelii (Sumatran orangutan; misclassified human reads)

5059;Clostridioides difficile (intestinal bacteria which are a cause of diarrhea and colitis)

4810;Selenomonas sputigena ATCC 35185 (bacteria found in the upper respiratory tract)

4492;Acinetobacter baumannii (common in hospital-derived infections; almost exclusively isolated from hospital environments)

4020;Porphyromonas sp. oral taxon 278 str. W7784

3793;Gorilla gorilla gorilla

3791;Prevotella sp. ICM33
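The jq expression above picks out leaf nodes, which are the nodes whose `tax_id` never appears as the `parent` of another node. The same selection can be sketched in Python. The field names are taken from the jq expression, but the toy table and its counts are made up:

```python
def leaf_nodes(tax_table):
    """Return nodes that are not the parent of any other node,
    sorted by read count in descending order."""
    parents = {node["parent"] for node in tax_table}
    leaves = [node for node in tax_table if node["tax_id"] not in parents]
    return sorted(leaves, key=lambda node: -node["total_count"])

# Made-up toy taxonomy table with two leaf nodes
toy_table = [
    {"tax_id": 1, "parent": None, "org": "root", "total_count": 100},
    {"tax_id": 2, "parent": 1, "org": "Bacteria", "total_count": 60},
    {"tax_id": 3, "parent": 2, "org": "toy bacterial species", "total_count": 40},
    {"tax_id": 4, "parent": 1, "org": "Viruses", "total_count": 30},
    {"tax_id": 5, "parent": 4, "org": "toy virus species", "total_count": 25},
]

for node in leaf_nodes(toy_table):
    print(str(node["total_count"]) + ";" + node["org"])
```

Restricting the list to leaf nodes avoids counting the same reads several times, once for a species and again for each of its parent nodes.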

Almost all reads in viral leaf nodes were classified under SARS2, but there were also about 700 reads classified under a CRESS virus which might be some kind of spurious matches, and there were a few reads for a parvovirus, a Pseudomonas phage, and a Streptococcus phage (a parvovirus and a Streptococcus phage also got short contigs in my MEGAHIT run):

$ jq -r '.[]|.tax_table[]|[.org,.total_count,.tax_id,.parent]|@tsv' SRR10971381.stat|awk -F\\t '{out[$3]=$0;parent[$3]=$4;name[$3]=$1;nonleaf[$4]}END{for(i in out){j=parent[i];chain="";do{chain=(chain?chain":":"")name[j];j=parent[j]}while(j!="");print out[i]"\t"!(i in nonleaf)"\t"chain}}'|sort -nr|grep Virus|sort -t$'\t' -rnk2|awk -F\\t '$5{print$2 FS$1" - "$6}'|head -n20|column -ts$'\t'

54238 Severe acute respiratory syndrome coronavirus 2 - Severe acute respiratory syndrome-related coronavirus:Sarbecovirus:Betacoronavirus:Orthocoronavirinae:Coronaviridae:Cornidovirineae:Nidovirales:Riboviria:Viruses

693 CRESS virus sp. - CRESS viruses:unclassified ssDNA viruses:unclassified DNA viruses:unclassified viruses:Viruses

159 uncultured virus - environmental samples:Viruses

20 Parvovirus NIH-CQV - unclassified Parvovirinae:Parvovirinae:Parvoviridae:Viruses

15 Curvibacter phage P26059B - unclassified Autographivirinae:Autographivirinae:Podoviridae:Caudovirales:Viruses

13 Pseudomonas phage HU1 - unclassified Podoviridae:Podoviridae:Caudovirales:Viruses

12 Bat coronavirus RaTG13 - Severe acute respiratory syndrome-related coronavirus:Sarbecovirus:Betacoronavirus:Orthocoronavirinae:Coronaviridae:Cornidovirineae:Nidovirales:Riboviria:Viruses

9 Iodobacter phage PhiPLPE - Iodobacter virus PLPE:Iodovirus:Myoviridae:Caudovirales:Viruses

9 Bat SARS-like coronavirus - Severe acute respiratory syndrome-related coronavirus:Sarbecovirus:Betacoronavirus:Orthocoronavirinae:Coronaviridae:Cornidovirineae:Nidovirales:Riboviria:Viruses

8 Rhodoferax phage P26218 - unclassified Podoviridae:Podoviridae:Caudovirales:Viruses

8 Prokaryotic dsDNA virus sp. - unclassified dsDNA viruses:unclassified DNA viruses:unclassified viruses:Viruses

8 Pangolin coronavirus - unclassified Betacoronavirus:Betacoronavirus:Orthocoronavirinae:Coronaviridae:Cornidovirineae:Nidovirales:Riboviria:Viruses

5 uncultured cyanophage - environmental samples:unclassified bacterial viruses:unclassified viruses:Viruses

5 Rimavirus - Siphoviridae:Caudovirales:Viruses

4 Genomoviridae sp. - unclassified Genomoviridae:Genomoviridae:Viruses

3 Streptococcus phage Javan363 - unclassified Siphoviridae:Siphoviridae:Caudovirales:Viruses

3 Sewage-associated circular DNA virus-14 - unclassified viruses:Viruses

3 RDHV-like virus SF1 - unclassified viruses:Viruses

3 Paramecium bursaria Chlorella virus NW665.2 - unclassified Chlorovirus:Chlorovirus:Phycodnaviridae:Viruses

3 Microviridae sp. - unclassified Microviridae:Microviridae:Viruses
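The colon-separated lineages in the output above are built by following parent pointers from each node up to the root of the tree. Here is a small Python sketch of that walk. The function name, the numeric IDs and the toy tree are my own and are not real taxonomy IDs:

```python
def lineage(tax_id, parent_of, name_of):
    """Join the names of all ancestors of a node, nearest ancestor first."""
    chain = []
    current = parent_of.get(tax_id)
    while current is not None and current in name_of:
        chain.append(name_of[current])
        current = parent_of.get(current)
    return ":".join(chain)

# Made-up toy tree: 4 -> 3 -> 2 -> 1 (the IDs are placeholders)
name_of = {1: "Viruses", 2: "Riboviria", 3: "Nidovirales", 4: "toy virus"}
parent_of = {2: 1, 3: 2, 4: 3}

print(name_of[4], "-", lineage(4, parent_of, name_of))
```

The walk stops when a node has no recorded parent, so a table that contains only part of the tree gives a lineage that ends at the highest node that is present.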

Trinity is a lot slower than MEGAHIT, and it requires more RAM and more free disk space, so the first few times when I ran it on my local computer, I either ran out of disk space, or I ran out of RAM so it had to use swap and I terminated the run. I think it required around 20-30 GB RAM and around 50 GB disk space to assemble Wu et al.'s reads. The --max_memory flag which specifies a maximum limit for RAM and swap use cannot be omitted, and one of the first times I ran Trinity I think it was interrupted in the middle of the run because it exceeded the --max_memory limit. Running Trinity took about 24 hours on a server with 16 cores:

Trinity --seqType fq --left SRR10971381_1.fastq --right SRR10971381_2.fastq --max_memory 50G --CPU 16 --output trinityout

The contigs produced by Trinity can be downloaded from here: https://sars2.net/f/Trinity.fasta.gz.

I got a total of only 159,532 contigs even though Wu et al. wrote that they got 1,329,960 contigs with Trinity, but it's probably because I used the reads that are publicly available at the SRA where almost all human reads are masked, so I got only a small number of human contigs.

My Trinity run produced six contigs for SARS2, and my longest contig was 29,879 bases long even though Wu et al.'s longest Trinity contig was only 11,760 bases. It might be because I didn't trim the reads before I ran Trinity, or because human reads were masked in my run but not in Wu et al.'s run, or because I used a different version of Trinity than Wu et al.:

$ wget https://sars2.net/f/Trinity.fasta.gz

$ seqkit stat Trinity.fasta.gz

file format type num_seqs sum_len min_len avg_len max_len

Trinity.fasta.gz FASTA DNA 159,532 46,995,710 186 294.6 29,879

$ curl 'https://eutils.ncbi.nlm.nih.gov/entrez/eutils/efetch.fcgi?db=nuccore&rettype=fasta&id=MN908947.3'>sars2.fa

$ bowtie2-build sars2.fa{,}

[...]

$ bowtie2 -p4 --no-unal -x sars2.fa -fU Trinity.fasta.gz|samtools sort ->trinity.bam

159532 reads; of these:

159532 (100.00%) were unpaired; of these:

159526 (100.00%) aligned 0 times

6 (0.00%) aligned exactly 1 time

0 (0.00%) aligned >1 times

0.00% overall alignment rate

$ samtools view trinity.bam|ruby -ane'l=$F[9].length;s=$F[3].to_i;e=s+l-$F[5].scan(/\d+(?=I)/).map(&:to_i).sum-1;puts [$F[0],s,e,l,$F.grep(/NM:i/)[0][/\d+/],$F[5]]*" "'|(echo contig_name start end length mismatches cigar_string;cat)|column -t

contig_name start end length mismatches cigar_string

TRINITY_DN1442_c18_g1_i2 1 29874 29879 10 4M5I29870M

TRINITY_DN1442_c18_g1_i4 77 26478 26402 4 21432M1D4970M

TRINITY_DN131_c1_g1_i1 19825 20128 306 17 299M2I5M

TRINITY_DN1442_c18_g1_i7 21531 26479 4998 54 7M5I3M5I3M4I8M35I4928M

TRINITY_DN1442_c18_g1_i1 26425 29871 3476 41 4M2I10M2D2M1I5M5I2M11I7M1D5M8I4M2I3408M

TRINITY_DN8024_c1_g1_i1 28394 28626 233 9 233M
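The end column in the table comes from the Ruby one-liner, which adds the contig length to the start position and subtracts the inserted bases. As a rough cross-check, here is a minimal Python sketch of the same calculation that follows the SAM convention for which CIGAR operations consume reference bases. The function names are hypothetical and not part of any library. One difference is that this version also counts deleted bases (D), so for contigs whose CIGAR string contains a D operation it gives an end coordinate that is larger than the one in the table by the number of deleted bases (26,479 instead of 26,478 for the second contig):

```python
import re

# CIGAR operations that consume reference bases (per the SAM specification).
REF_CONSUMING = set("MDN=X")
# CIGAR operations that consume query (contig) bases.
QUERY_CONSUMING = set("MIS=X")


def cigar_spans(cigar):
    """Return (reference_span, query_length) for a CIGAR string."""
    ref_span = 0
    query_len = 0
    for count, op in re.findall(r"(\d+)([MIDNSHP=X])", cigar):
        if op in REF_CONSUMING:
            ref_span += int(count)
        if op in QUERY_CONSUMING:
            query_len += int(count)
    return ref_span, query_len


def reference_end(start, cigar):
    """1-based inclusive end coordinate of the alignment on the reference."""
    ref_span, _ = cigar_spans(cigar)
    return start + ref_span - 1


if __name__ == "__main__":
    # Start positions and CIGAR strings copied from the table above.
    rows = [(1, "4M5I29870M"), (77, "21432M1D4970M"), (28394, "233M")]
    for start, cigar in rows:
        print(start, reference_end(start, cigar), cigar_spans(cigar)[1], cigar)
```

The query length returned by `cigar_spans` should equal the contig length in the table, which is a quick way to check that a CIGAR string was copied correctly.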

If my longest contig is compared to the third version of Wuhan-Hu-1, the only differences are that my contig has 5 extra nucleotides at the start, one nucleotide change in the middle, and it's missing the last 29 bases of the poly(A) tail:

$ R -e 'if(!require("BiocManager"))install.packages("BiocManager");BiocManager::install("Biostrings")'

[...]

$ aldi2()(cat "$@">/tmp/aldi;Rscript --no-init-file -e 'm=t(as.matrix(Biostrings::readDNAStringSet("/tmp/aldi")));diff=which(rowSums(m!=m[,1])>0);split(diff,cumsum(diff(c(-Inf,diff))!=1))|>sapply(\(x)c(if(length(x)==1)x else paste0(x[1],"-",tail(x,1)),apply(m[x,,drop=F],2,paste,collapse="")))|>t()|>write.table(quote=F,sep=";",row.names=F)')

$ seqkit sort -lr Trinity.fasta.gz|seqkit head -n1|cat - sars2.fa|mafft --thread 4 -|aldi2

[...]

;TRINITY_DN1442_c18_g1_i2 len=29879 path=[0:0-79 1:80-80 2:81-21515 4:21516-21544 5:21545-21565 6:21566-21568 7:21569-21569 8:21570-26483 9:26484-29878];NC_045512.2 Severe acute respiratory syndrome coronavirus 2 isolate Wuhan-Hu-1, complete genome

1-5;CGGGG;-----

21666;G;A

29880-29908;-----------------------------;AAAAAAAAAAAAAAAAAAAAAAAAAAAAA
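The positions printed by aldi2 are alignment columns and not genome coordinates. Because the contig has 5 extra bases at the start, the reference row of the alignment begins with 5 gap columns, so every later column is shifted by 5 relative to Wuhan-Hu-1. Below is a small sketch of the conversion, assuming that the reference row has no other gaps (which is consistent with the output above, since any other gap would have been listed as a difference). The function name is hypothetical, and the sequence is a stand-in where only the gap layout matters:

```python
def column_to_ref_position(aligned_ref, column):
    """Map a 1-based alignment column to a 1-based position in the
    ungapped reference. Returns None if the reference has a gap there."""
    if aligned_ref[column - 1] == "-":
        return None
    # Count the non-gap reference characters up to and including the column.
    return sum(1 for base in aligned_ref[:column] if base != "-")


if __name__ == "__main__":
    # Stand-in for the aligned reference row: 5 leading gap columns followed
    # by 29,903 bases (the length of MN908947.3). The bases are placeholders.
    aligned_ref = "-" * 5 + "A" * 29903
    for column in (21666, 29880, 29908):
        print(column, column_to_ref_position(aligned_ref, column))
```

Under that assumption the single nucleotide change is at position 21,661 of Wuhan-Hu-1, and the missing part of the poly(A) tail is positions 29,875-29,903.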

The reason why the longest contig is shown to have 10 mismatches in the BAM file is that Bowtie2 doesn't allow gaps within the first 4 bases of the read by default, so it treated the first 4 bases as mismatches and it added a 5-base insert after them:

$ bowtie2 -h|grep gbar

--gbar <int> disallow gaps within <int> nucs of read extremes (4)

I don't know why Trinity produced 6 different contigs without merging them into a single contig, even though the longest contig covered the entire genome apart from the poly(A) tail. However it could be because the reads were not trimmed properly so there were mismatches in the middle of the contigs.

Out of my 6 contigs, the shortest 233-base contig matched positions 28,394 to 28,626 of Wuhan-Hu-1. It has 3 wrong bases at the start of the contig, but it might be because many reads have 3-5 bases of extra crap at the ends but I didn't trim the ends of reads. The shortest contig also has 6 errors where a T base was changed to an A base, which may have been because A bases have the lowest average quality and I didn't trim low-quality bases from the ends of reads:

$ seqkit grep -p TRINITY_DN8024_c1_g1_i1 Trinity.fasta.gz|seqkit seq -rp|cat - sars2.fa|mafft --thread 4 --clustalout -

[...]

TRINITY_DN8024_ -------------gtaccccaaggtttacccaataatactgcgtcttggttcaccgctct

NC_045512.2 atcaaaacaacgtcggccccaaggtttacccaataatactgcgtcttggttcaccgctct

.********************************************

TRINITY_DN8024_ cacacaacatggcaaggaagaccttaaattccctcgaggacaaggcgttccaattaacac

NC_045512.2 cactcaacatggcaaggaagaccttaaattccctcgaggacaaggcgttccaattaacac

*** ********************************************************

TRINITY_DN8024_ caaaagcagtccagatgaccaaataggctactaccgaagagctaccagacgaaatcgtgg

NC_045512.2 caatagcagtccagatgaccaaattggctactaccgaagagctaccagacgaattcgtgg

*** ******************** **************************** ******

TRINITY_DN8024_ tggtgacggaaaaatgaaagatctcagtccaagaaggtatttctactacctaggaactgg

NC_045512.2 tggtgacggtaaaatgaaagatctcagtccaagatggtatttctactacctaggaactgg

********* ************************ *************************

TRINITY_DN8024_ gccaga------------------------------------------------------

NC_045512.2 gccagaagctggacttccctatggtgctaacaaagacggcatcatatgggttgcaactga

******

[...]
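The substitution types in the alignment above can be tallied with a short script instead of counting them by eye. This is a minimal sketch with a hypothetical function name. It uses only the three full-width blocks of the Clustal output, so it does not cover the 3 mismatching bases at the start of the contig, and the contig was reverse-complemented before the alignment, so the bases are shown on the plus strand of Wuhan-Hu-1:

```python
from collections import Counter


def substitution_counts(aligned_ref, aligned_query):
    """Count (reference_base, query_base) pairs at mismatching columns,
    skipping columns where either sequence has a gap."""
    counts = Counter()
    for ref_base, query_base in zip(aligned_ref, aligned_query):
        if "-" in (ref_base, query_base):
            continue
        if ref_base != query_base:
            counts[(ref_base, query_base)] += 1
    return counts


# The second, third and fourth blocks of the alignment above, concatenated.
contig = (
    "cacacaacatggcaaggaagaccttaaattccctcgaggacaaggcgttccaattaacac"
    "caaaagcagtccagatgaccaaataggctactaccgaagagctaccagacgaaatcgtgg"
    "tggtgacggaaaaatgaaagatctcagtccaagaaggtatttctactacctaggaactgg"
)
reference = (
    "cactcaacatggcaaggaagaccttaaattccctcgaggacaaggcgttccaattaacac"
    "caatagcagtccagatgaccaaattggctactaccgaagagctaccagacgaattcgtgg"
    "tggtgacggtaaaatgaaagatctcagtccaagatggtatttctactacctaggaactgg"
)

if __name__ == "__main__":
    for (ref_base, query_base), n in substitution_counts(reference, contig).items():
        print(f"{ref_base}->{query_base}: {n}")
```

For these three blocks the only substitution type is t to a, which occurs 6 times.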

When I ran Trinity a second time after trimming the reads with fastp, I got 109,806 contigs with a maximum length of 31,241. This time only two contigs aligned against SARS2, but the longer contig was 31,241 bases long because it had a 1,379-base insertion in the middle, and the shorter contig had the same 1,379-base insertion:

$ fastp -f 5 -t 5 -53 -i SRR10971381_1.fastq.gz -I SRR10971381_2.fastq.gz -o SRR10971381_1.trim.fq -O SRR10971381_2.trim.fq

[...]

$ trinity --seqType fq --left SRR10971381_1.trim.fq --right SRR10971381_2.trim.fq --max_memory 30G --CPU 16 --output trinityout2

[...]

$ bowtie2 -p4 --no-unal -x sars2.fa -fU Downloads/trinityout2.Trinity.fasta.gz|samtools sort ->trinity2.bam

[...]

$ samtools view trinity2.bam|ruby -ane'l=$F[9].length;s=$F[3].to_i;e=s+l-$F[5].scan(/\d+(?=I)/).map(&:to_i).sum-1;puts [$F[0],s,e,l,$F.grep(/NM:i/)[0][/\d+/],$F[5]]*" "'|(echo contig_name start end length mismatches cigar_string;cat)|column -t

contig_name start end length mismatches cigar_string

TRINITY_DN39043_c0_g1_i2 4 29865 31241 1379 27790M1379I2072M

TRINITY_DN39043_c0_g1_i1 21487 29852 9755 1413 6M4D5M1I3M3I2M3I28M1I2M2I6M9D6242M1379I2072M

When I did a BLAST search for the insert, it started with a 64-base segment that was identical to bases 29,109-29,172 of Wuhan-Hu-1, followed by a 1,315-base segment that was identical to bases 27,794-29,108 of Wuhan-Hu-1. So the two segments are adjacent in the genome (the first segment starts one base after the second segment ends), but for some reason their order was flipped in the insert.
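The arithmetic behind the insert can be checked with a short sketch. Taken together, the two segments cover the contiguous region 27,794-29,172 of Wuhan-Hu-1, which is 1,379 bases long, so the insert is that region with its last 64 bases moved to the front. The CIGAR string 27790M1379I2072M with start position 4 also places the insert directly after reference position 27,793, which is the base before the region begins. The helper names below are hypothetical, and the reference string in the last line is a toy sequence and not real data:

```python
def segment_length(start, end):
    """Length of a 1-based inclusive interval."""
    return end - start + 1


def rotated_region(reference, region_start, split_start, region_end):
    """Return reference[split_start..region_end] followed by
    reference[region_start..split_start-1], using 1-based inclusive
    coordinates. This mimics the segment order seen in the insert."""
    head = reference[region_start - 1:split_start - 1]
    tail = reference[split_start - 1:region_end]
    return tail + head


def last_ref_position_before_insert(start, leading_matches):
    """Reference position of the last base aligned before the insertion."""
    return start + leading_matches - 1


first_segment = (29109, 29172)   # start of the insert
second_segment = (27794, 29108)  # rest of the insert

insert_length = segment_length(*first_segment) + segment_length(*second_segment)

if __name__ == "__main__":
    print(segment_length(*first_segment), segment_length(*second_segment), insert_length)
    print(last_ref_position_before_insert(4, 27790))
    # Toy example: region 3..8 of a 10-letter string, split at position 6.
    print(rotated_region("ABCDEFGHIJ", 3, 6, 8))
```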

Mark Bailey wrote: "As has been noted, 'bat SL-CoVZC45' was an in silico genome, 29,802 nucleotides in length, invented in 2018, that was used by Fan Wu et al. as a template genome for the invention of the SARS-CoV-2 genome." [https://drsambailey.substack.com/p/a-farewell-to-virology-expert-edition]

His misconception is based on a paragraph of the paper by Wu et al. which said: "The viral genome organization of WHCV was determined by sequence alignment to two representative members of the genus Betacoronavirus: a coronavirus associated with humans (SARS-CoV Tor2, GenBank accession number AY274119) and a coronavirus associated with bats (bat SL-CoVZC45, GenBank accession number MG772933). The un-translational regions and open-reading frame (ORF) of WHCV were mapped on the basis of this sequence alignment and ORF prediction. The WHCV viral genome was similar to these two coronaviruses (Fig. 1 and Supplementary Table 3). The order of genes (5' to 3') was as follows: replicase ORF1ab, spike (S), envelope (E), membrane (M) and nucleocapsid (N)." [https://www.ncbi.nlm.nih.gov/pmc/articles/PMC7094943/]

However the authors meant that after they had already assembled the complete genome of SARS2, they aligned it against the genomes of SARS1 and ZC45 in order to annotate the proteins corresponding to each ORF. They didn't mean that they used SARS1 or ZC45 as a reference genome when they assembled the raw reads.

You can try running NCBI's ORFfinder on the genome of SARS2. Go to https://www.ncbi.nlm.nih.gov/orffinder/, enter NC_045512 as the accession number, enable the option to ignore nested ORFs, and click submit. It shows that the third ORF on the positive strand starts at position 21,536 and ends at position 25,384.

In order to know what name you should give to the protein coded by the ORF, you can check which protein SARS1 has around the same region. It happens to be the spike protein:

$ curl -s 'https://eutils.ncbi.nlm.nih.gov/entrez/eutils/efetch.fcgi?db=nuccore&rettype=fasta_cds_aa&id=NC_004718'|grep \>|sed 's/.*protein=//;s/\].*location=/: /;s/\].*//'

ORF1ab polyprotein: join(265..13392,13392..21485)

ORF1a polyprotein: 265..13413

spike glycoprotein: 21492..25259

ORF3a protein: 25268..26092

ORF3b protein: 25689..26153

small envelope protein: 26117..26347

membrane glycoprotein M: 26398..27063

ORF6 protein: 27074..27265

ORF7a protein: 27273..27641

ORF7b protein: 27638..27772

ORF8a protein: 27779..27898

ORF8b protein: 27864..28118

nucleocapsid protein: 28120..29388

ORF9b protein: 28130..28426

ORF9a protein: 28583..28795

But for example the ORF6 of Wuhan-Hu-1 starts at position 27,202, so if you simply looked at which ORF in SARS1 is the closest to it but you didn't align the sequences, it would be ORF7a. But if you align the sequences, then the ORF6 of SARS2 and SARS1 will have the same starting position so it's easier to do the annotation:

$ curl 'https://eutils.ncbi.nlm.nih.gov/entrez/eutils/efetch.fcgi?db=nuccore&rettype=fasta&id=NC_004718,NC_045512'>sars12.fa

$ mafft --quiet sars12.fa>sars12.aln

$ seqkit head -n1 sars12.fa|seqkit subseq -r27074:27100|seqkit locate -if- sars12.aln|sed 1d|cut -f5,6|seqkit subseq -r $(tr \\t :) sars12.aln

>NC_004718.3 SARS coronavirus Tor2, complete genome

atgtttcatcttgttgacttccaggtt

>NC_045512.2 Severe acute respiratory syndrome coronavirus 2 isolate Wuhan-Hu-1, complete genome

atgtttcatctcgttgactttcaggtt
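To illustrate why comparing raw coordinates without an alignment can give the wrong annotation, here is a minimal sketch that picks the SARS1 coding sequence whose start position is numerically closest to a given position. The start coordinates are copied from the efetch output for NC_004718 shown earlier, and the function name is hypothetical:

```python
# Start coordinates of the SARS1 (NC_004718) coding sequences.
SARS1_ORF_STARTS = [
    ("ORF1ab polyprotein", 265),
    ("ORF1a polyprotein", 265),
    ("spike glycoprotein", 21492),
    ("ORF3a protein", 25268),
    ("ORF3b protein", 25689),
    ("small envelope protein", 26117),
    ("membrane glycoprotein M", 26398),
    ("ORF6 protein", 27074),
    ("ORF7a protein", 27273),
    ("ORF7b protein", 27638),
    ("ORF8a protein", 27779),
    ("ORF8b protein", 27864),
    ("nucleocapsid protein", 28120),
    ("ORF9b protein", 28130),
    ("ORF9a protein", 28583),
]


def nearest_orf(position, orf_starts=SARS1_ORF_STARTS):
    """Name of the ORF whose start is closest to an unaligned position."""
    return min(orf_starts, key=lambda item: abs(item[1] - position))[0]


if __name__ == "__main__":
    # Start of the third ORF that ORFfinder reports for SARS2.
    print(nearest_orf(21536))
    # Start of ORF6 in Wuhan-Hu-1.
    print(nearest_orf(27202))
```

The lookup works for the spike ORF, but for position 27,202 it returns ORF7a, because the start of ORF7a in SARS1 is 71 bases away while the start of ORF6 is 128 bases away. After an alignment the two ORF6 start codons fall in the same column, so the ambiguity goes away.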

The website of NCBI's Sequence Read Archive has a tab which shows a breakdown of what percentage of reads are estimated to come from different organisms and different taxonomic nodes. The tab displays the results of a program called SRA Taxonomy Analysis Tool (STAT), which searches for 32-base k-mers among the reads which match a set of reference sequences. A database which contains the number of reads which match each taxonomic node for each SRA run is currently about 500 GB in size, but you can search it through BigQuery at the Google Cloud Platform. [https://www.ncbi.nlm.nih.gov/sra/docs/sra-cloud-based-taxonomy-analysis-table/] I tried using BigQuery to search for the oldest sequencing runs which matched over 10,000 reads of SARS2 (taxid 2697049):

select * from `nih-sra-datastore.sra.metadata` as m, `nih-sra-datastore.sra_tax_analysis_tool.tax_analysis` as tax where m.acc=tax.acc and tax_id=2697049 and total_count>10000 order by releasedate

I got 17 results from before 2020, but all of them had zero reads which aligned against Wuhan-Hu-1 when I used Bowtie2 with the default settings. The oldest results I found which had reads that aligned against SARS2 were runs for the samples WHU01 and WHU02, which were submitted by Wuhan University and published on January 18th 2020 (in an unknown timezone). [https://www.ncbi.nlm.nih.gov/sra/?term=SRR10903402] When I ran MEGAHIT on the reads of the WHU01 sample without doing any trimming, my longest contig was 17,699 bases long, and it was identical to positions 12,197-29,895 of Wuhan-Hu-1:

wget ftp://ftp.sra.ebi.ac.uk/vol1/fastq/SRR109/002/SRR10903402/SRR10903402_{1,2}.fastq.gz # download from ENA because downloading from SRA is too slow

curl 'https://eutils.ncbi.nlm.nih.gov/entrez/eutils/efetch.fcgi?db=nuccore&rettype=fasta&id=MN908947.3'>sars2.fa

megahit -1 SRR10903402_1.fastq.gz -2 SRR10903402_2.fastq.gz -o megawuhanjan

seqkit sort -lr megawuhanjan/final.contigs.fa|seqkit head -n1|cat - sars2.fa|mafft --clustalout -

When I trimmed the reads with fastp using the default settings apart from a longer minimum length, almost half of the read pairs got an adapter sequence trimmed, so the adapter sequences may have earlier prevented assembling a longer contig. Now my longest contig was 29,809 bases long, and it missed the first 76 bases and last 19 bases of Wuhan-Hu-1 (which should demonstrate that it's common for MEGAHIT contigs to miss pieces from the start or end of the genome):

$ fastp -i SRR10903402_1.fastq.gz -I SRR10903402_2.fastq.gz -o SRR10903402_1.trim.fq.gz -O SRR10903402_2.trim.fq.gz

Read1 before filtering:

total reads: 676694

total bases: 101889418

[...]

Filtering result:

reads passed filter: 1126442

reads failed due to low quality: 193854

reads failed due to too many N: 0

reads failed due to too short: 33092

reads with adapter trimmed: 287572

bases trimmed due to adapters: 12910527

[...]

$ megahit -1 SRR10903402_1.trim.fq.gz -2 SRR10903402_2.trim.fq.gz -o megawuhanjan3

[...]

2023-05-20 14:22:39 - 425 contigs, total 246369 bp, min 286 bp, max 29809 bp, avg 579 bp, N50 540 bp

2023-05-20 14:22:39 - ALL DONE. Time elapsed: 72.962219 seconds

$ seqkit sort -lr megawuhanjan3/final.contigs.fa|seqkit head -n1|cat sars2.fa -|mafft ->temp.aln

[...]